Optimize AI Models Like a Pro: 10 Techniques for Better Results

Building an AI model is just the start. To make it truly valuable, you need to optimize it. AI model optimization ensures your model runs faster, uses fewer resources, and delivers accurate results. Whether you’re a data engineer working on real-time pipelines or an AI team tackling model drift, these techniques can transform your models into efficient, reliable tools.

In this article, we’ll explore model performance techniques that enhance AI model efficiency and accuracy. From pruning to hyperparameter tuning, we’ll break down each method in simple terms, with examples and tips to help you apply them. Let’s dive into the world of AI model optimization and learn how to make your models shine!

What is AI Model Optimization?

AI model optimization is the process of improving an AI model to make it faster, smaller, and more accurate. It focuses on two goals:

- Enhancing AI model efficiency: Reducing memory, CPU usage, or inference time to save costs and enable deployment on devices like phones or IoT systems.
- Improving effectiveness: Boosting accuracy and reliability to ensure better predictions or decisions.

Optimization tackles challenges like model drift, where a model’s performance drops due to changes in data or environments. For example, a recommendation system trained on 2023 shopping data might struggle with 2025 trends. Optimization keeps models relevant and valuable, making them assets for businesses in finance, healthcare, or e-commerce.

Why AI Model Optimization Matters

Optimized models create more value. They:

- Cost less to run: Efficient models use fewer cloud resources, saving money.
- Deliver better results: Accurate models improve decisions, like fraud detection or medical diagnostics.
- Run on more devices: Smaller models work on edge devices, enabling real-time apps like self-driving cars.
- Combat model drift: Regular optimization ensures models stay effective as data changes.

For data engineers, enhancing AI model efficiency means faster pipelines and smoother deployments.

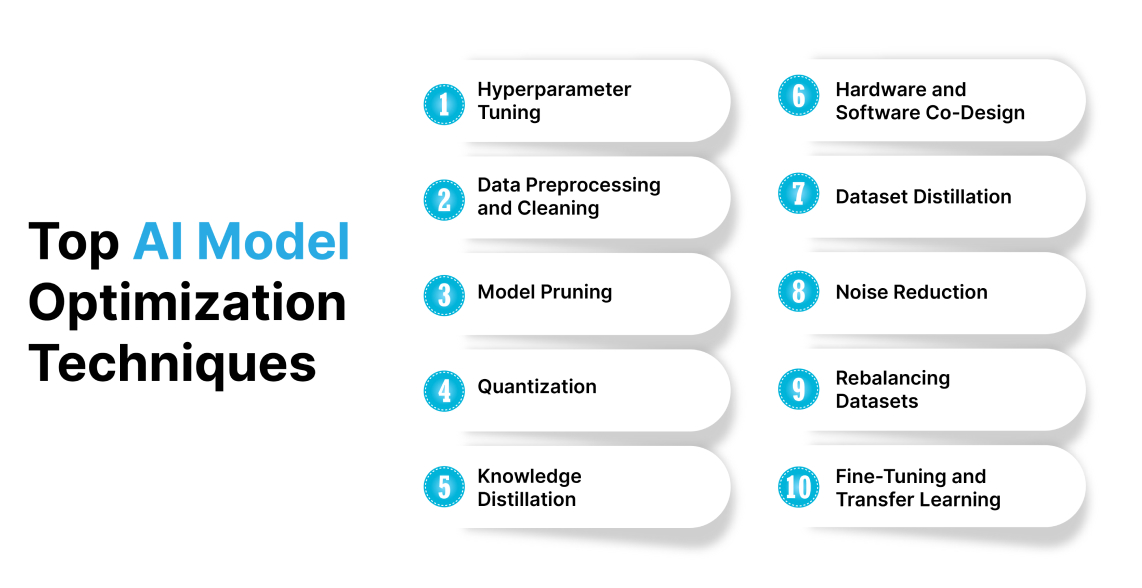

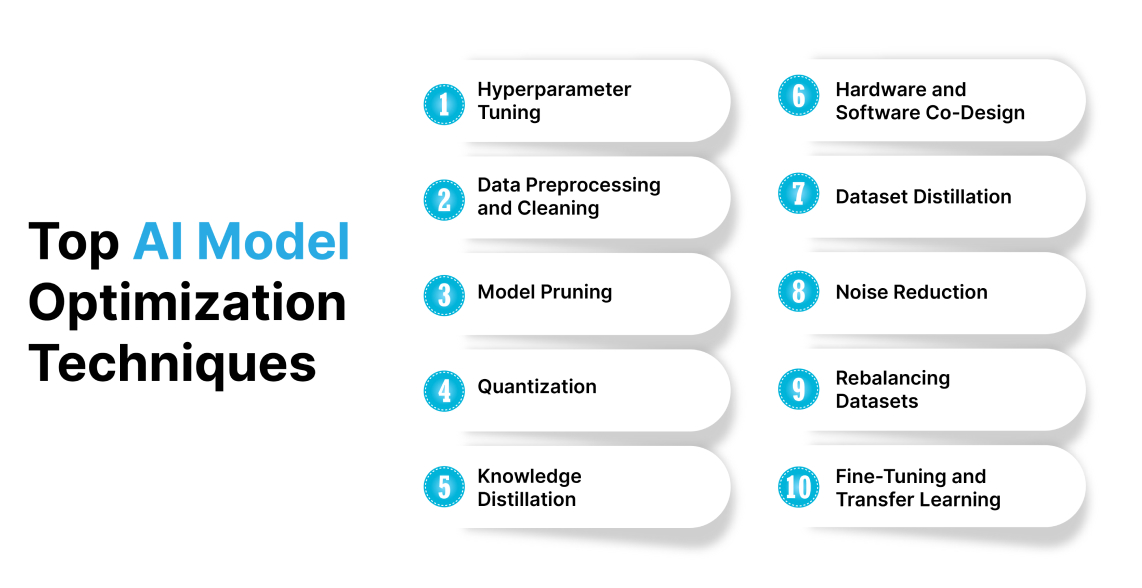

Top AI Model Optimization Techniques

Let’s explore the top model performance techniques.

1. Hyperparameter Tuning

Hyperparameters are settings that control how a model learns, like learning rate or batch size. Tuning them is a key model performance technique for AI model optimization.

How It Works:

- Adjust settings to find the best combination for accuracy and speed.
- Common methods include:
  - Grid Search: Tests all possible combinations (slow but thorough).
  - Random Search: Samples random combinations (faster but less exhaustive).
  - Bayesian Optimization: Uses past results to pick better settings (efficient and smart).

Example:

Imagine tuning a model that predicts house prices. A learning rate that is too high can make training overshoot good solutions and miss patterns, while one that is too low slows training. Bayesian optimization can home in on a well-performing rate in fewer trials than grid search, boosting accuracy.
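To make the difference between grid search and random search concrete, here is a minimal sketch in plain Python. The `validation_loss` function is a made-up stand-in for "train the model and measure validation loss", and the search ranges are assumptions chosen for illustration; in practice a library such as Optuna or Ray Tune would manage the trials.

```python
import itertools
import math
import random

def validation_loss(learning_rate, batch_size):
    # Hypothetical stand-in for "train the model and return its validation loss".
    # By construction it is lowest at learning_rate=0.01 and batch_size=64.
    return (math.log10(learning_rate) + 2) ** 2 + ((batch_size - 64) / 64) ** 2

def grid_search(learning_rates, batch_sizes):
    # Grid search: evaluate every combination and keep the best one.
    candidates = itertools.product(learning_rates, batch_sizes)
    return min(candidates, key=lambda c: validation_loss(*c))

def random_search(n_trials, seed=0):
    # Random search: sample a fixed number of combinations instead of all of them.
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        learning_rate = 10 ** rng.uniform(-4, 0)  # sample on a log scale
        batch_size = rng.choice([16, 32, 64, 128, 256])
        trials.append((learning_rate, batch_size))
    return min(trials, key=lambda c: validation_loss(*c))

best_grid = grid_search([0.0001, 0.001, 0.01, 0.1], [16, 32, 64, 128])
best_random = random_search(n_trials=20)
print("grid search best:", best_grid)
print("random search best:", best_random)
```

Grid search here runs 16 evaluations and can only return values that sit on the grid, while random search runs a fixed budget of 20 trials and can land between grid points. Bayesian optimization would go one step further and use earlier trial results to decide where to sample next.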

Why It Matters:

- Improves model accuracy and convergence speed.
- Tools like Optuna or Ray Tune automate tuning, saving time.

Pro Tip: Use early stopping to halt training when validation performance plateaus, which helps prevent overfitting and saves compute.
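Early stopping can be sketched in a few lines. The example below assumes the per-epoch validation losses are already available as a list (invented numbers); the function name and the `patience` parameter are illustrative, although most training frameworks offer a similar setting.

```python
def early_stopping_epoch(val_losses, patience=3):
    # Returns (best_epoch, stopped_epoch) for a sequence of per-epoch validation losses.
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            # No improvement for `patience` epochs in a row: stop training here.
            return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

# Made-up validation losses for nine epochs.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.51, 0.52, 0.53, 0.49, 0.48]
best_epoch, stopped_epoch = early_stopping_epoch(val_losses, patience=3)
print("best epoch:", best_epoch, "stopped at epoch:", stopped_epoch)
```

With a patience of 3, training stops during the plateau and keeps the weights from the best epoch seen so far. A larger patience would have reached the later, slightly lower losses, so the setting trades compute for the chance of further improvement.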

2. Data Preprocessing and Cleaning

High-quality data is the foundation of AI model optimization. Cleaning and preprocessing data ensures models learn from accurate, relevant information.

How It Works:

- Normalization: Scales features (e.g., income, age) to a common range (0-1) to prevent one feature dominating.
- Handling Missing Values: Fill gaps with means, medians, or advanced methods like k-Nearest Neighbors (KNN).
- Outlier Removal: Cap or remove extreme values that skew predictions.
- Data Augmentation: Add variety (e.g., rotate images) to make models robust.

Example:

For a customer churn model, normalize income and age, fill missing contract dates with medians, and remove outliers like negative ages. This can boost accuracy.
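The cleaning steps in this example can be sketched with the Python standard library. The customer records, field names, and the 0-120 age range below are assumptions made up for illustration, and income stands in for whichever numeric field has gaps.

```python
import statistics

def drop_invalid_ages(rows, min_age=0, max_age=120):
    # Outlier removal: discard rows whose age is outside a plausible range.
    return [r for r in rows if min_age <= r["age"] <= max_age]

def fill_missing_with_median(values):
    # Missing values: replace None with the median of the known values.
    median = statistics.median(v for v in values if v is not None)
    return [median if v is None else v for v in values]

def min_max_normalize(values):
    # Normalization: rescale values to the 0-1 range.
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

# Hypothetical customer records for a churn model.
customers = [
    {"age": 34, "income": 52000},
    {"age": -5, "income": 48000},   # impossible age, treated as an outlier
    {"age": 51, "income": None},    # missing income
    {"age": 29, "income": 150000},
]

clean = drop_invalid_ages(customers)
incomes = fill_missing_with_median([r["income"] for r in clean])
normalized_incomes = min_max_normalize(incomes)
normalized_ages = min_max_normalize([r["age"] for r in clean])
print(normalized_incomes)
print(normalized_ages)
```

On real projects the median and the min/max values should be computed on the training split only and then reused on validation and production data, so that no information leaks from data the model has not seen.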

Why It Matters:

- Clean data reduces bias and errors, enhancing AI model efficiency.
- Speeds up training by removing inconsistencies.

Pro Tip: Visualize data with scatter plots to spot outliers before cleaning.

3. Model Pruning

Pruning removes unnecessary parts of a model, making it smaller and faster without losing much accuracy. It’s a powerful model performance technique.

How It Works:

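- Magnitude-Based Pruning: Removes weights close to zero, as they contribute little to the output.
- Structured Pruning: Removes entire neurons, channels, or layers for bigger savings.
- Lottery Ticket Hypothesis: Suggests that large networks contain small subnetworks that, trained on their own, can perform about as well as the original; pruning methods try to find them.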

Example:

A convolutional neural network (CNN) for image recognition might have redundant weights. Pruning them reduces model size, speeding up inference on mobile devices.
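Magnitude-based pruning is simple enough to show on a plain list of weights. This is a minimal sketch with invented weight values; deep learning frameworks provide their own pruning utilities that work on whole layers.

```python
def magnitude_prune(weights, sparsity):
    # Zero out the fraction `sparsity` of weights with the smallest absolute value.
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned

# Made-up weights from a single layer.
weights = [0.8, -0.02, 0.5, 0.01, -0.9, 0.03, -0.4, 0.005, 0.3, -0.015]
pruned = magnitude_prune(weights, sparsity=0.4)
print(pruned)
```

Zeroed weights only save memory and time when the model is stored or executed in a sparse-aware way, which is one reason structured pruning (removing whole neurons or channels) is often easier to turn into real speedups.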

Why It Matters:

- Cuts memory and CPU usage, ideal for edge devices.
- Maintains accuracy with careful fine-tuning.

Pro Tip: Retrain after pruning to recover any minor accuracy loss.

4. Quantization

Quantization reduces the precision of a model’s numbers, shrinking its size and speeding up computations.

How It Works:

- Converts 32-bit floating-point numbers to 8-bit integers or 16-bit floats.
- Post-Training Quantization (PTQ): Applied after training; the accuracy loss is often small but should be measured.
- Quantization-Aware Training (QAT): Simulates quantization during training so the model learns to compensate, which usually preserves more accuracy than PTQ.

Example:

A language model quantized from 32-bit to 8-bit weights shrinks by about 75% and can run faster (for example, 2-3x, depending on hardware support for integer math) on a smartphone for real-time chatbots.
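The size arithmetic and the rounding error behind quantization can be illustrated with a small sketch. It uses symmetric 8-bit quantization with a single scale for all values, which is one common scheme among several; the weight values are invented.

```python
def quantize_int8(values):
    # Symmetric quantization: map floats to integers in [-127, 127] using one scale.
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize(quantized, scale):
    # Recover approximate float values from the stored integers.
    return [q * scale for q in quantized]

# Made-up 32-bit float weights.
weights = [0.42, -1.27, 0.003, 0.9, -0.35]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

max_error = max(abs(w - r) for w, r in zip(weights, restored))
size_reduction = 1 - 8 / 32  # 8 bits per weight instead of 32

print("quantized:", q_weights)
print("max rounding error:", max_error)
print("size reduction:", size_reduction)
```

Each weight is off by at most half a quantization step, which is why accuracy usually drops only slightly. The 75% figure applies to the quantized weights themselves; the scale factors and any layers kept in higher precision add a little overhead.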

Why It Matters:

- Enhances AI model efficiency by lowering resource needs.
- Enables deployment on low-power devices.

Pro Tip: Use QAT for critical tasks to minimize accuracy drops.

5. Knowledge Distillation

Knowledge distillation transfers knowledge from a large “teacher” model to a smaller “student” model, maintaining performance with less complexity.

How It Works:

- The teacher model generates “soft” predictions (probability distributions).
- The student model learns from these and the original data.
- Combines distillation loss (mimicking teacher) and classification loss (correct labels).

Example:

A large CNN for medical imaging is distilled into a smaller model, achieving 95% of its accuracy but running on hospital tablets for instant diagnostics.
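The combined loss can be written out for a single training example. This is a sketch under common conventions rather than a fixed recipe: the temperature of 2.0, the equal weighting `alpha=0.5`, and the logit values are assumptions, and the distillation term is scaled by the squared temperature as is often done.

```python
import math

def softmax(logits, temperature=1.0):
    # Higher temperatures produce softer (more spread out) probabilities.
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=2.0, alpha=0.5):
    # Distillation term: KL divergence between softened teacher and student outputs.
    teacher_soft = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    kl = sum(t * math.log(t / s) for t, s in zip(teacher_soft, student_soft) if t > 0)
    # Classification term: cross-entropy against the correct label.
    cross_entropy = -math.log(softmax(student_logits)[true_label])
    return alpha * temperature ** 2 * kl + (1 - alpha) * cross_entropy

# Hypothetical logits for a three-class problem where class 0 is correct.
teacher_logits = [4.0, 1.0, 0.2]
close_student = [3.5, 1.2, 0.1]
far_student = [0.1, 3.0, 1.0]

loss_close = distillation_loss(close_student, teacher_logits, true_label=0)
loss_far = distillation_loss(far_student, teacher_logits, true_label=0)
print("student close to teacher:", loss_close)
print("student far from teacher:", loss_far)
```

A student whose outputs resemble the teacher's gets a lower loss than one that disagrees with both the teacher and the label, which is the signal that drives the smaller model toward the larger one's behavior.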

Why It Matters:

- Creates lightweight models for edge devices.
- Enhances AI model efficiency without sacrificing quality.

Pro Tip: Choose a teacher model with high accuracy to maximize student performance.

6. Hardware and Software Co-Design

Optimizing models for specific hardware, like GPUs or TPUs, boosts performance. Co-design aligns software and hardware for maximum efficiency.

How It Works:

- Use hardware accelerators (e.g., NVIDIA GPUs, Google TPUs) for parallel computations.
- Frameworks like TensorFlow Lite or PyTorch Mobile optimize for mobile devices.
- Custom chips (ASICs) can be designed for specific models.

Example:

A fraud detection model optimized for Intel’s OpenVINO toolkit runs 3x faster on Intel CPUs, cutting inference time for real-time banking.

Why It Matters:

- Speeds up training and inference, enhancing AI model efficiency.
- Reduces power usage for battery-powered devices.

Pro Tip: Use tools like TensorRT for NVIDIA hardware optimization.

7. Dataset Distillation

Dataset distillation condenses large datasets into smaller ones, speeding up training without losing key information.

How It Works:

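- Creates a compact dataset, often made of synthesized samples, that captures the essential patterns of the original.
- Leaves out redundant or noisy data, so the model trains on fewer but more informative examples.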

Example:

A 1TB image dataset for object detection is distilled to 100GB, cutting training time while maintaining accuracy.

Why It Matters:

- Reduces training costs and time.
- Improves model focus on relevant data.

Pro Tip: Combine with data augmentation to maintain variety.

8. Noise Reduction

Removing irrelevant or inaccurate data (“noise”) improves model performance and training efficiency.

How It Works:

- Tools like Granica Signal identify low-value data (e.g., duplicates, outliers).
- Focuses training on high-relevance samples.

Example:

A hiring model trained on resumes removes duplicate entries and irrelevant fields (e.g., hobbies), boosting fairness and accuracy.
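A simple version of this cleanup needs no special tooling. The sketch below drops fields considered irrelevant and then removes exact duplicates; the resume records and field names are invented for illustration.

```python
def remove_noise(records, irrelevant_fields):
    # Drop irrelevant fields first, then keep only the first copy of each record.
    seen = set()
    cleaned = []
    for record in records:
        kept = {k: v for k, v in record.items() if k not in irrelevant_fields}
        key = tuple(sorted(kept.items()))
        if key not in seen:
            seen.add(key)
            cleaned.append(kept)
    return cleaned

# Hypothetical resume records.
resumes = [
    {"candidate_id": 1, "years_experience": 5, "skills": "python,sql", "hobbies": "chess"},
    {"candidate_id": 1, "years_experience": 5, "skills": "python,sql", "hobbies": "hiking"},
    {"candidate_id": 2, "years_experience": 2, "skills": "java", "hobbies": "chess"},
]

cleaned = remove_noise(resumes, irrelevant_fields={"hobbies"})
print(cleaned)
```

Because the irrelevant fields are removed before comparing records, two entries that differ only in hobbies count as duplicates. Near-duplicates and mislabeled samples need fuzzier matching, which is where dedicated tools help on large datasets.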

Why It Matters:

- Enhances AI model efficiency by reducing training time.
- Improves prediction reliability.

Pro Tip: Use automated tools to detect noise in large datasets.

9. Rebalancing Datasets

Imbalanced datasets skew model predictions toward majority classes. Rebalancing ensures fair and accurate outcomes.

How It Works:

- Oversampling: Adds copies of minority class data.
- Undersampling: Reduces majority class data.
- Synthetic Data: Generates synthetic examples for underrepresented classes (e.g., SMOTE).

Example:

A fraud detection model trained on mostly non-fraudulent transactions is rebalanced with synthetic fraud cases, improving its ability to detect fraud.
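Random oversampling, the simplest of the rebalancing methods, can be sketched as follows. The transaction records and the 95/5 class split are made up for illustration; SMOTE differs in that it creates new interpolated samples instead of copying existing ones.

```python
import random
from collections import Counter

def oversample_minority(rows, label_key, seed=0):
    # Duplicate randomly chosen rows of smaller classes until all classes
    # have as many rows as the largest class.
    rng = random.Random(seed)
    by_label = {}
    for row in rows:
        by_label.setdefault(row[label_key], []).append(row)
    target = max(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        balanced.extend(group)
        balanced.extend(rng.choices(group, k=target - len(group)))
    return balanced

# Hypothetical transactions: 95 legitimate, 5 fraudulent.
transactions = (
    [{"amount": 20 + i, "is_fraud": 0} for i in range(95)]
    + [{"amount": 900 + i, "is_fraud": 1} for i in range(5)]
)

balanced = oversample_minority(transactions, label_key="is_fraud")
counts = Counter(row["is_fraud"] for row in balanced)
print(counts)
```

Rebalancing should be applied to the training split only. Oversampling before splitting lets copies of the same row land in both training and test data, which inflates the measured accuracy.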

Why It Matters:

- Prevents bias, ensuring equitable predictions.
- Boosts accuracy for minority classes.

Pro Tip: Monitor class distribution during training to catch imbalances early.

10. Fine-Tuning and Transfer Learning

Fine-tuning adapts pre-trained models to specific tasks, saving time and boosting accuracy.

How It Works:

- Start with a model trained on a large dataset (e.g., ImageNet, BERT).
- Adjust layers for your task with a small dataset.
- Use transfer learning to leverage pre-learned features.

Example:

A general language model is fine-tuned on legal documents so that it can analyze court rulings in seconds, with better accuracy on legal text than the general model.

Why It Matters:

- Speeds up training with pre-trained knowledge.
- Improves accuracy with limited data.

Pro Tip: Use a low learning rate during fine-tuning to preserve pre-trained features.

Practical Applications of AI Model Optimization

AI model optimization delivers real-world impact across industries:

- Finance: Quantized fraud detection models run in milliseconds, saving banks millions.
- Healthcare: Pruned diagnostic models operate on hospital tablets, enabling instant results.
- E-commerce: Distilled recommendation systems cut cloud costs, personalizing shopping in real time.
- Manufacturing: Optimized edge models detect defects on production lines, reducing waste.

For data engineers, these techniques streamline pipelines, ensuring models deploy quickly and scale efficiently. Tools like Docker can complement optimization by packaging models for consistent deployment.

Challenges in AI Model Optimization

Common hurdles in AI optimization include:

- Accuracy Trade-offs: Pruning or quantization may reduce accuracy if overdone.
- Complexity: Tuning or co-design requires expertise and time.
- Resource Limits: Large models need powerful hardware for training, even when optimizing.
- Overfitting: Complex models may memorize data, not generalize.

To overcome these:

- Use automated tools (e.g., Optuna, TensorRT) to simplify tuning.
- Monitor metrics like accuracy, latency, and FLOPs during optimization.
- Combine techniques (e.g., pruning + quantization) for better results.

Tools and Frameworks for Optimization

Several tools make AI model optimization easier:

Open Source

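- Optuna: Automates hyperparameter tuning.
- TensorRT: Optimizes inference for NVIDIA hardware (a free NVIDIA SDK; only some of its components are open source).
- ONNX Runtime: Runs and optimizes models exported to the ONNX format from different frameworks.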

Commercial

- Google Cloud AI Platform: Offers end-to-end optimization pipelines.
- Granica Signal: Reduces noise and rebalances datasets.

Hardware-Specific

- Intel OpenVINO: Boosts performance on Intel CPUs.
- TensorFlow Lite: Optimizes for mobile devices.

These tools save time and ensure enhancing AI model efficiency is achievable, even for smaller teams.

Evaluating Optimization Success

To measure model performance techniques, track:

- Accuracy: Share of correct predictions (on imbalanced data, also check precision and recall).
- Inference Time: How fast the model responds.
- Memory Usage: Resource consumption during operation.
- Throughput: Predictions per second.
- FLOPs: Floating-point operations needed per prediction, a measure of computational cost.

Compare optimized models against baselines using datasets like ImageNet or GLUE. For example, a quantized model might cut inference time by 50% with a 1% accuracy drop, a worthwhile trade-off for real-time apps.
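Inference time and throughput can be measured with a small timing harness like the one below. The two "models" are toy functions invented for this sketch (the second computes the same result in closed form); in a real comparison you would pass in the prediction functions of the baseline and optimized models.

```python
import statistics
import time

def benchmark(predict, inputs, warmup=5):
    # Run a few warm-up calls so one-time setup costs do not distort the timings.
    for x in inputs[:warmup]:
        predict(x)
    latencies = []
    start = time.perf_counter()
    for x in inputs:
        t0 = time.perf_counter()
        predict(x)
        latencies.append(time.perf_counter() - t0)
    total = time.perf_counter() - start
    ordered = sorted(latencies)
    return {
        "mean_latency_ms": 1000 * statistics.mean(latencies),
        "p95_latency_ms": 1000 * ordered[int(0.95 * (len(ordered) - 1))],
        "throughput_per_s": len(inputs) / total,
    }

def baseline_model(x):
    # Toy stand-in for a slow model: 2,000 multiply-adds per prediction.
    return sum(i * x for i in range(2000))

def optimized_model(x):
    # Same result in closed form, since 0 + 1 + ... + 1999 = 1,999,000.
    return x * 1999000

inputs = list(range(200))
baseline_stats = benchmark(baseline_model, inputs)
optimized_stats = benchmark(optimized_model, inputs)
print("baseline: ", baseline_stats)
print("optimized:", optimized_stats)
```

Checking that both versions return the same predictions before comparing their speed keeps the benchmark honest, and reporting a high percentile alongside the mean shows whether occasional slow requests are hiding behind a good average.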

Future Trends in AI Model Optimization

The future of AI model optimization is exciting:

- Data Efficiency: Models will learn from smaller datasets, reducing costs.
- Energy Savings: Green AI will prioritize low-power techniques.
- AutoML: Automated tools will handle complex optimizations.
- Custom Hardware: Chips like Intel’s Neural Compute Stick will enable edge AI.

Research into hybrid models (neural + symbolic) and federated learning will further enhance AI model efficiency, making AI accessible to more industries.

Getting Started with AI Model Optimization

Follow these steps:

- Assess Your Model: Check accuracy, inference time, and resource usage.
- Pick Techniques: Start with pruning or quantization for quick wins.
- Use Tools: Try Optuna for tuning or TensorRT for inference.
- Test and Iterate: Compare metrics before and after optimization.
- Monitor Drift: Retrain regularly to maintain performance.
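For the drift monitoring step, one lightweight check is to compare a feature's recent values against the values seen at training time. The sketch below measures how far the recent mean has moved in units of the baseline standard deviation; the 0.5 threshold and the basket-value numbers are assumptions for illustration, and production systems often use richer statistical tests.

```python
import statistics

def drift_score(baseline, recent):
    # How many baseline standard deviations the recent mean has moved.
    base_mean = statistics.mean(baseline)
    base_std = statistics.stdev(baseline)
    shift = abs(statistics.mean(recent) - base_mean)
    if base_std == 0:
        return 0.0 if shift == 0 else float("inf")
    return shift / base_std

def needs_retraining(baseline, recent, threshold=0.5):
    # Flag the model for retraining when the shift exceeds the chosen threshold.
    return drift_score(baseline, recent) > threshold

# Hypothetical average basket values at training time and in two later periods.
baseline_basket = [20, 22, 19, 21, 23, 20, 18, 22]
recent_stable = [21, 20, 22, 19, 21, 20]
recent_shifted = [30, 32, 29, 31, 33, 30]

print("stable period drifted: ", needs_retraining(baseline_basket, recent_stable))
print("shifted period drifted:", needs_retraining(baseline_basket, recent_shifted))
```

Running a check like this on a schedule turns "retrain regularly" into "retrain when the data has actually changed", which avoids both stale models and unnecessary training runs.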

Real-World Example: Optimizing a Recommendation System

Imagine you’re a data engineer building a recommendation system for an e-commerce site. Here’s how you’d apply these techniques:

- Clean Data: Remove duplicate user clicks and normalize purchase amounts.
- Tune Hyperparameters: Use Optuna to find the best learning rate, improving accuracy by 15%.
- Prune Model: Cut 40% of weights, reducing memory usage.
- Quantize: Convert to 8-bit integers, speeding up inference by 3x.
- Distill Knowledge: Train a smaller model to match 95% of the original’s performance.
- Deploy with Docker: Package the model in a container for consistent cloud deployment.

This optimized system personalizes recommendations in real time, boosting sales while cutting cloud costs.

Conclusion

AI model optimization is essential for creating fast, accurate, and cost-effective models. By using model performance techniques like pruning, quantization, and hyperparameter tuning, you can enhance AI model efficiency and tackle challenges like model drift. Whether you’re deploying on edge devices or scaling cloud pipelines, these methods ensure your models deliver maximum value.

For data engineers, optimization streamlines workflows and enables real-time applications. Tools like Optuna, TensorRT, and Granica make the process accessible, while platforms like Index.dev offer expert talent to accelerate your projects. Start optimizing today, and turn your AI models into powerful business assets!