AI Models Explained: Types, Examples, and How They Work

Artificial intelligence has transformed the way we analyze data, automate tasks, and drive innovation in nearly every industry. But at the heart of this powerful technology lie artificial intelligence models — special computer programs trained to learn from data and make decisions without direct human input.

If you're curious about AI models, how they work, the types of AI models, and popular examples, you've come to the right place. This comprehensive guide breaks down everything you need to know using clear, simple language to help you understand this fascinating world.

What Are Artificial Intelligence Models?

At a high level, an AI model is a program that’s designed to complete a specific task by learning from data. It mimics some aspects of how humans think and make decisions — but uses computer algorithms and large datasets to do so at incredible speed and scale.

The basic idea is this:

“One or more algorithms analyze large amounts of input data, recognize patterns, and produce outputs like predictions, classifications, or recommendations — often without needing further human intervention.”

These outputs can range from writing emails and recognizing faces in photos to forecasting stock prices or guiding self-driving cars.

The more quality data you provide, and the better the algorithms, the more accurate and useful an AI model can become.

AI, Machine Learning, and Deep Learning: How Are They Related?

The terms artificial intelligence, machine learning, and deep learning are often used interchangeably, but they refer to related yet distinct concepts:

-

Artificial Intelligence (AI): the broad science of making computers simulate human intelligence. AI systems can perform tasks that usually require human cognition like understanding language, recognizing images, and making decisions.

-

Machine Learning (ML): a subset of AI where systems learn from data without being explicitly programmed. ML models improve automatically with experience by identifying patterns and making predictions.

-

Deep Learning (DL): a more specialized branch of machine learning that uses multi-layered neural networks to learn complex patterns in large datasets, often used for image and speech recognition.

Think of AI as the foundation, with machine learning and deep learning as specialized towers built on top to handle specific tasks.

How Do Artificial Intelligence Models Work?

Understanding the inner workings of an AI model can seem complex, but the main steps are:

-

Data Collection: A large dataset related to the problem is gathered.

-

Training: The AI model learns patterns from the data using algorithms — forming connections like neural networks.

-

Validation: The model’s performance is tested and fine-tuned to increase accuracy

-

Prediction: After training, the model makes predictions or decisions based on new, unseen data.

This process is iterative — data scientists continue feeding data and refining algorithms to improve the model’s output.

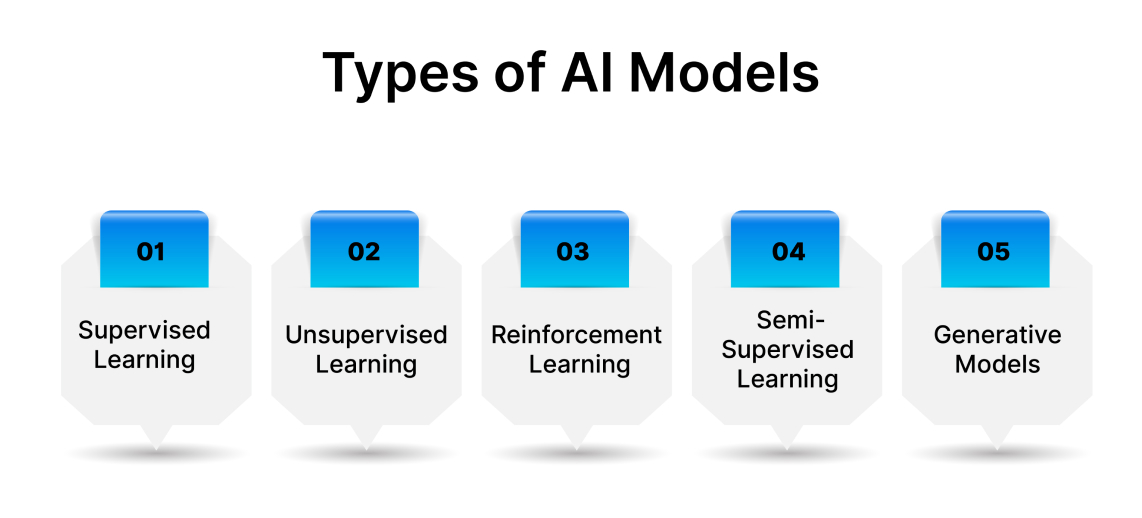

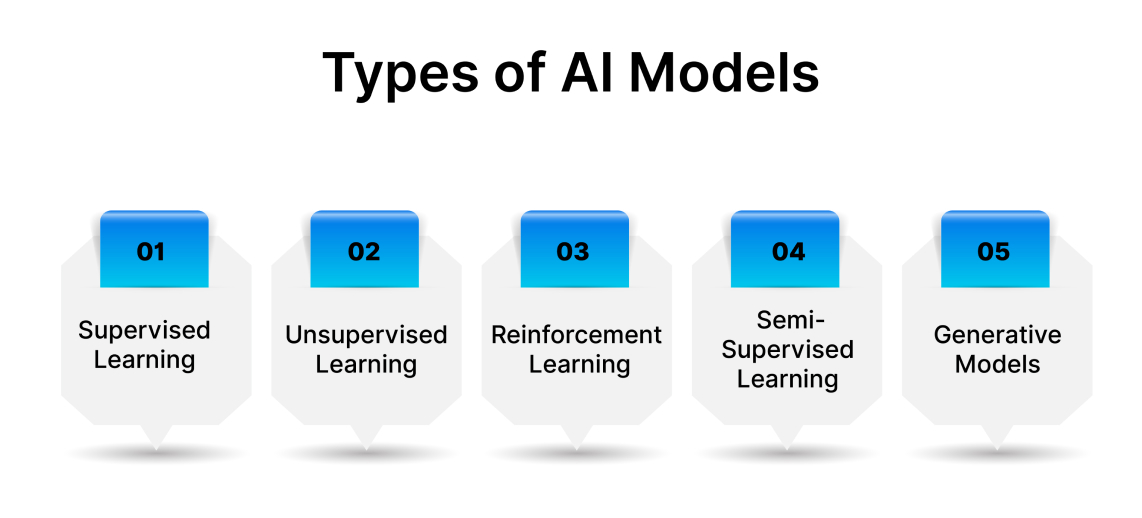

Types of AI Models

AI models come in many shapes and sizes, each suited to different tasks and data. Understanding the main types of AI models is key for anyone interested in AI or data science.

1. Supervised Learning

Supervised learning is like teaching with training wheels. The model learns from labeled data, where inputs are paired with correct outputs (answers). For example, images tagged with the objects they contain.

The model tries to learn the relationship between inputs and outputs so it can accurately predict outputs for new inputs.

Common use cases: spam filters, image classification, fraud detection.

2. Unsupervised Learning

Unsupervised learning has no labeled answers provided. Instead, the AI model must find hidden patterns or groupings in the data on its own.

This is useful for complex data exploration and pattern discovery — like clustering customer groups or anomaly detection.

Common use cases: customer segmentation, trend analysis, data compression.

3. Reinforcement Learning

Reinforcement learning involves teaching an AI model by rewarding or punishing it based on its actions. It learns strategies over time by trial and error to maximize rewards.

It’s useful where models must make sequential decisions, such as in gaming or robotic control.

Common use cases: game playing (e.g., AlphaGo), robotic automation, stock trading.

4. Semi-Supervised Learning

Semi-supervised learning is a hybrid where the model first learns from a small set of labeled data, then refines its knowledge through a large pool of unlabeled data.

This approach can be more efficient since labeled data can be expensive or difficult to get.

5. Generative Models

Generative models produce new data instances that resemble the training data. They learn the underlying data distribution and create outputs like images, text, or music.

Examples include Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs).

Common use cases: synthetic image generation, text generation (like ChatGPT), data augmentation.

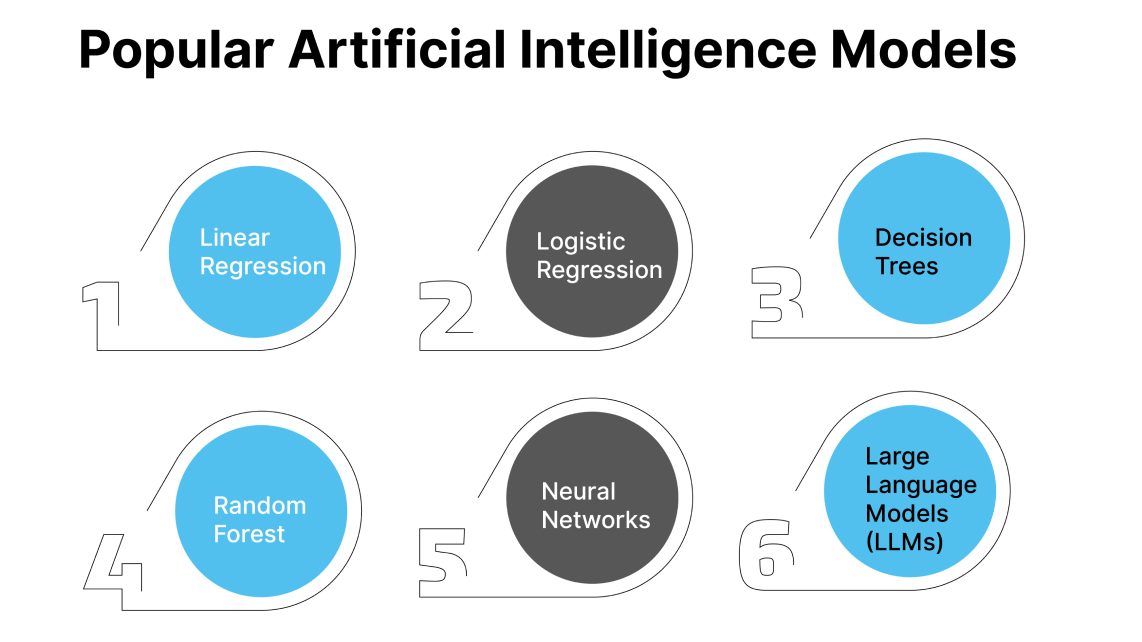

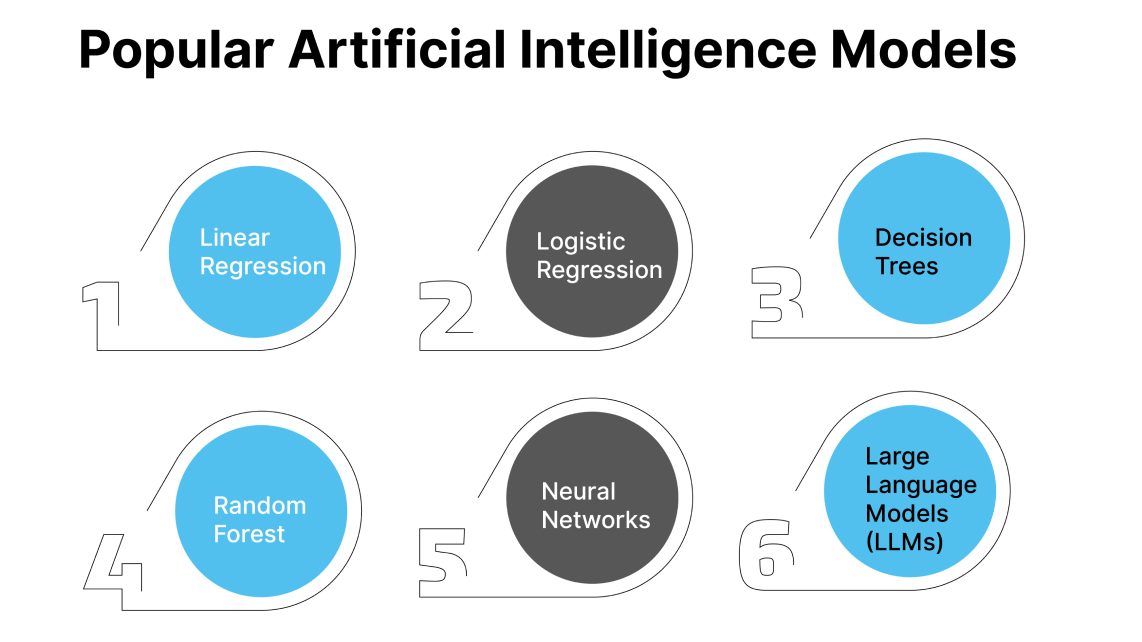

Popular AI Models and Examples

Below are some of the most widely used AI models across many industries:

1. Linear Regression

This statistical model finds the linear relationship between input and output variables. It’s used for predicting numerical values based on past data trends.

Example use: predicting sales based on advertising spend.

2. Logistic Regression

Similar to linear regression but for classification problems where the outputs are categories (e.g., yes/no).

Example use: detecting fraud in credit card transactions.

3. Decision Trees

Decision trees use a flowchart-like structure with "if-then" rules to classify data or predict outcomes based on attribute splits.

Example use: customer churn prediction.

4. Random Forest

This ensemble method uses multiple decision trees working together to improve accuracy and reduce overfitting.

Example use: forecasting customer behavior.

5. Neural Networks

Inspired by the human brain, neural networks consist of interconnected nodes (neurons). Deep neural networks have many layers that enable complex pattern recognition.

Example use: voice recognition, image classification.

6. Large Language Models (LLMs)

LLMs are deep learning models trained on massive text datasets to understand and generate human language. Examples include OpenAI’s ChatGPT.

Example use: chatbots, automatic text summarization, language translation.

How AI Models Are Trained

Training an AI model involves several steps to ensure it learns effectively and performs well:

-

Gather Data: The foundation is having large, quality datasets that are relevant to the task.

-

Clean Data: Removing errors, inconsistencies, and irrelevant information to improve learning.

-

Choose Model Type: Selecting the best AI model type based on the problem and data.

-

Train The Model: Feed the data into the model and allow it to adjust internal parameters.

-

Validate and Test: Evaluate the model’s accuracy using separate data. Adjust as needed.

-

Deploy the Model: Integrate into real-world applications and monitor its ongoing performance.

Training may require multiple iterations and tuning to avoid issues like overfitting — when a model performs well on training data but poorly on new data.

Challenges in AI Model Training

Developing effective AI models isn’t without challenges. Some common difficulties include:

Data Bias

Data bias occurs when the data used to train an AI model is not representative of the real-world scenarios the model will encounter. This can lead to biased or unfair outcomes, where the model performs well for some groups but poorly for others. For example, if a facial recognition model is trained primarily on images of light-skinned individuals, it may struggle to accurately recognize faces of people with darker skin tones.

Causes of Data Bias

Data bias can arise from several sources:

-

Sampling Bias: If the data collected is not diverse enough, it may not capture the full range of scenarios. For instance, if a healthcare model is trained only on data from one demographic group, it may not perform well for others.

-

Labeling Bias: Human error in labeling data can introduce bias. If the people labeling the data have their own biases, these can be reflected in the training data.

-

Historical Bias: If the data reflects historical inequalities or prejudices, the model may perpetuate these biases. For example, if a hiring algorithm is trained on past hiring data that favored certain demographics, it may continue to favor those groups.

Mitigating Data Bias

To address data bias, organizations can take several steps:

-

Diverse Data Collection: Ensure that the training data includes a wide range of examples from different demographics, backgrounds, and scenarios.

-

Regular Audits: Conduct regular audits of the data and model outputs to identify and correct biases.

-

Bias Detection Tools: Use tools and techniques designed to detect bias in AI models, allowing for adjustments before deployment.

Overfitting

Overfitting occurs when an AI model becomes too tailored to the training data, capturing noise and outliers rather than the underlying patterns. This means the model performs exceptionally well on the training data but poorly on new, unseen data.

Signs of Overfitting

Some signs that a model may be overfitting include:

-

High Training Accuracy, Low Validation Accuracy: If the model shows high accuracy on training data but significantly lower accuracy on validation or test data, it’s a strong indicator of overfitting.

-

Complexity of the Model: More complex models (with many parameters) are more prone to overfitting, especially if the training dataset is small.

Preventing Overfitting

To prevent overfitting, data scientists can employ several strategies:

-

Simplifying the Model: Use simpler models with fewer parameters to reduce the risk of overfitting.

-

Regularization Techniques: Apply regularization methods, such as L1 or L2 regularization, which add a penalty for complexity to the loss function.

-

Cross-Validation: Use techniques like k-fold cross-validation to ensure the model generalizes well across different subsets of the data.

-

Early Stopping: Monitor the model’s performance on a validation set during training and stop training when performance begins to degrade.

Computational Resources

Training large AI models requires significant computational power and storage. The complexity of the algorithms and the volume of data can strain available resources, leading to longer training times and increased costs.

Challenges with Computational Resources

Some challenges related to computational resources include:

-

High Costs: Accessing powerful hardware, such as GPUs or TPUs, can be expensive, especially for small organizations or startups.

-

Time Constraints: Training large models can take a long time, delaying deployment and making it difficult to iterate quickly.

-

Infrastructure Limitations: Not all organizations have the necessary infrastructure to support large-scale AI training, which can limit their ability to develop advanced models.

Addressing Resource Challenges

To address these challenges, organizations can:

-

Cloud Computing: Utilize cloud services that offer scalable computing resources, allowing organizations to pay for only what they need.

-

Distributed Training: Implement distributed training techniques that allow models to be trained across multiple machines, speeding up the process.

-

Optimizing Algorithms: Use more efficient algorithms that require less computational power while still delivering accurate results.

-

Explainability: Complex models (especially deep learning ones) can act as "black boxes" that are hard to interpret.

-

Continuous Updating: AI models often need retraining as new data and situations emerge.

Future of AI Models

Advances in AI model training are rapidly accelerating. Exciting future possibilities include:

Transfer Learning

Transfer learning is a technique that allows an AI model trained on one task to be reused for a different but related task. Instead of starting from scratch, data scientists can leverage the knowledge gained from a pre-trained model, significantly reducing the time and resources needed for training.

How Transfer Learning Works

In transfer learning, a model is first trained on a large dataset for a specific task. Once it has learned the essential features and patterns, the model can be fine-tuned or adapted to a new task with a smaller dataset. This process involves:

-

Freezing Layers: In many cases, the earlier layers of a neural network, which capture general features, are kept unchanged (frozen), while the later layers are retrained to adapt to the new task.

-

Fine-Tuning: The model is then fine-tuned using the new dataset, allowing it to adjust its parameters to better fit the specific requirements of the new task.

Benefits of Transfer Learning

Transfer learning offers several advantages:

-

Time Efficiency: It significantly reduces the time required to train a model, as the foundational knowledge is already established.

-

Resource Savings: Organizations can save on computational resources since they do not need to train a model from scratch.

-

Improved Performance: Models that utilize transfer learning often achieve better performance, especially when the new dataset is small or lacks diversity.

Real-World Applications of Transfer Learning

Transfer learning is already being used in various fields:

-

Natural Language Processing (NLP): Models like BERT and GPT-3 are pre-trained on vast amounts of text data and can be fine-tuned for specific tasks like sentiment analysis or question answering.

-

Computer Vision: Pre-trained models like ResNet and VGG can be adapted for tasks such as object detection or image classification with minimal additional training.

-

Healthcare: Transfer learning can be applied to medical imaging, where models trained on general images can be fine-tuned to identify specific conditions in medical scans.

More Efficient Training

As AI models grow in complexity and size, the demand for efficient training methods becomes increasingly important. Training large models can be time-consuming and resource-intensive, leading to higher costs and longer development cycles.

Advancements in Efficient Training Techniques

Several advancements are being made to improve the efficiency of AI model training:

-

Algorithm Optimization: Researchers are developing new algorithms that require less data and computational power while maintaining accuracy. Techniques like pruning (removing unnecessary parameters) and quantization (reducing the precision of calculations) can help streamline models.

-

Data Augmentation: By artificially increasing the size of the training dataset through techniques like rotation, flipping, and cropping, models can learn more robust features without needing additional data collection.

-

Batch Normalization: This technique normalizes the inputs to each layer in a neural network, allowing for faster training and improved convergence rates.

-

Adaptive Learning Rates: Using adaptive learning rate methods, such as Adam or RMSprop, allows models to adjust their learning rates dynamically during training, leading to faster convergence.

Benefits of More Efficient Training

Improving training efficiency has several benefits:

-

Reduced Costs: Organizations can save on computational resources and time, making AI development more accessible.

-

Faster Iteration: With quicker training times, data scientists can experiment with different models and parameters more rapidly, leading to faster innovation.

-

Broader Accessibility: More efficient training methods can enable smaller organizations and startups to develop AI models without needing extensive resources.

Real-World Examples of Efficient Training

Efficient training techniques are already being implemented in various industries:

-

Autonomous Vehicles: Companies like Tesla use efficient training methods to process vast amounts of driving data, allowing their models to learn from real-world scenarios quickly.

-

Healthcare Diagnostics: AI models for diagnosing diseases from medical images are trained using efficient techniques to ensure they can be developed and deployed rapidly.

-

Retail Analytics: Retailers use efficient training methods to analyze customer behavior and optimize inventory management, allowing them to respond quickly to market changes.

Business & Real-World Impact of AI Models

Artificial intelligence models are changing how organizations approach problem-solving across industries. They improve:

-

Data Analysis: Making sense of massive datasets quickly and accurately.

-

Automation: Performing repetitive or complex tasks without constant human oversight.

-

Predictions & Forecasting: Helping businesses anticipate trends and customer behavior.

-

Personalization: Crafting individual user experiences and recommendations.

-

Innovation: Accelerating product development, healthcare research, and more.

As AI models become more accessible and powerful, businesses small and large can harness their potential to stay competitive and innovative.

Conclusion

Understanding artificial intelligence models and the types of AI models available is essential for embracing the future of technology. From supervised learning to deep learning, these models offer powerful ways to turn data into actionable insights and automate decision-making.

Popular AI models like decision trees, neural networks, and large language models (LLMs) are already shaping industries and everyday life. Despite challenges in training and deployment, ongoing advances promise even greater capabilities ahead.

Whether you're a business leader, developer, or curious learner, getting familiar with AI models is a great step toward leveraging artificial intelligence’s transformative power for good.