LLMs vs. SLMs: The Rise of Smaller Models Outperforming Large Ones

Language models are the foundation that lets machines understand and generate human language. Whether to use large language models (LLMs) or small language models (SLMs) has become a significant point of discussion in the field.

While LLMs like GPT-4 and Claude impress with their massive size and versatility, small language models are proving that smaller can be smarter in specific situations. These efficient AI models are gaining attention for their speed, cost-effectiveness, and ability to handle targeted tasks.

This article explores when and why small language models outperform their larger counterparts, backed by real-world examples and practical insights.

What Are LLMs and SLMs?

Before diving into the comparison, let’s break down the basics.

Large Language Models (LLMs) are the giants of AI. Models like GPT-4, whose parameter count has not been officially disclosed, or Llama 3 with 70 billion parameters, are trained on massive datasets: think trillions of words from books, websites, and more. This makes them incredibly powerful for tasks like writing essays, coding, or even chatting like a human. Their strength lies in handling complex, open-ended problems with deep contextual understanding.

Small Language Models (SLMs), on the other hand, are the lightweight champions. With parameter counts typically ranging from a few hundred million to a few billion, like Meta’s Llama 3.2-1b or Google’s Gemma 2 2B, they focus on efficiency. Designed for specific tasks, such as customer support chatbots or medical data analysis, small language models use fewer resources and deliver faster results.

The key difference? LLMs are generalists, while SLMs are specialists. This distinction sets the stage for when small language models can outshine the big players.
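To make the size gap concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone occupy at a given numeric precision. It assumes memory is simply parameters times bits per parameter, and it ignores activations, the KV cache, and runtime overhead, so real deployments need more. The function name `weight_memory_gb` is illustrative, not from any library.

```python
def weight_memory_gb(num_params: float, bits_per_param: int = 16) -> float:
    """Approximate memory for the weights only, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Parameter counts taken from the examples in the text; precisions are assumptions.
for name, params in [("70B-parameter model", 70e9), ("1B-parameter model", 1e9)]:
    for bits in (16, 4):
        gb = weight_memory_gb(params, bits)
        print(f"{name} at {bits}-bit: ~{gb:.1f} GB")
```

Under these assumptions, a 70B model at 16-bit precision needs about 140 GB for weights alone, while a 1B model quantized to 4 bits fits in roughly half a gigabyte, which is why the latter can plausibly live on a phone.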

Why Size Isn’t Everything

The idea that bigger is better doesn’t always hold true in AI. While LLMs excel in versatility, their size comes with downsides: high computational costs, slower inference, and heavy resource demands. SLMs trade some of that versatility for task-specific solutions with lower operational overhead. Here’s why smaller models can win in the right scenarios.

- Speed and Real-Time Needs: SLMs process data quickly, making them ideal for applications where every second counts, like voice commands in drones or instant customer support.

- Cost Savings: Training and running LLMs can cost millions, requiring powerful GPUs. SLMs, with their lower resource needs, are budget-friendly, perfect for startups or small businesses.

- Resource Constraints: SLMs can run on edge devices like smartphones or IoT gadgets, while LLMs need cloud infrastructure, which isn’t always practical.

- Privacy and Control: Keeping data local with SLMs avoids cloud risks, a big plus in sensitive fields like healthcare or finance.

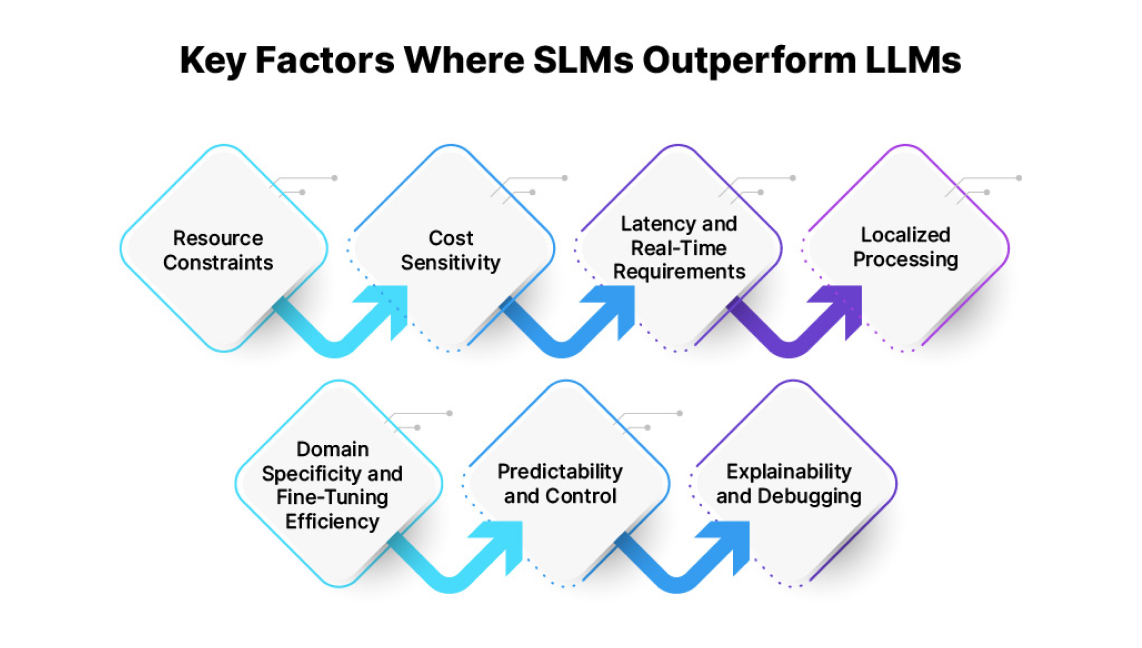

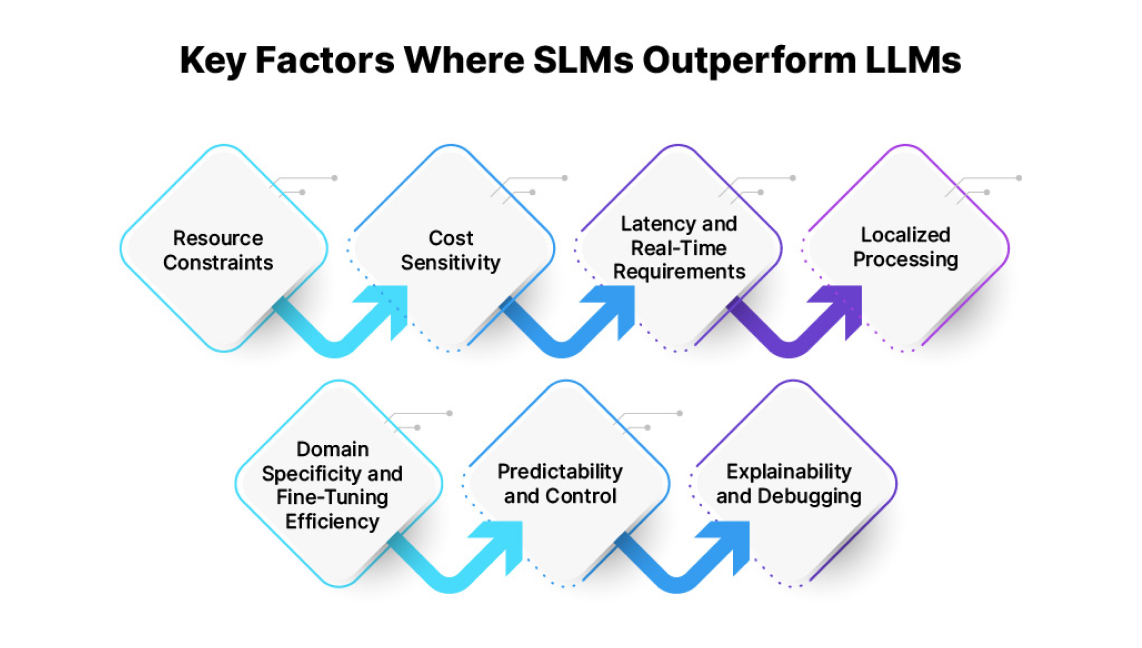

When SLMs Outperform LLMs: Key Factors

Let’s explore the core reasons why small language models can beat LLMs, supported by practical examples.

1. Resource Constraints

In environments with limited hardware, SLMs shine. They can run on devices like Raspberry Pi or smartphones without needing internet or heavy GPUs. For instance, a smart irrigation system in a remote farming area uses an SLM to analyze soil moisture and suggest watering times offline. This saves power and ensures functionality where connectivity is spotty, something an LLM can’t do efficiently.

- Example: Real-time translation on a budget IoT device in a remote village.

- Why SLM Wins: Low power needs and local processing beat LLM’s cloud dependency.

2. Cost Sensitivity

For high-volume, low-complexity tasks, LLMs are overkill. A company handling 100,000 daily support ticket tags can use an SLM fine-tuned for the job, running on modest hardware. This cuts costs compared to paying for LLM APIs, making efficient AI models a smart choice for scale.

- Example: Automating support responses without breaking the bank.

- Why SLM Wins: Lower training and operational costs suit high-volume needs.
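The cost argument can be sketched as simple arithmetic. The snippet below compares a pay-per-token API against a flat hourly rate for self-hosted hardware. Only the 100,000 tickets per day comes from the scenario above; the tokens per ticket, the price per million tokens, and the server rate are placeholder assumptions, not real prices, so substitute your own figures.

```python
def monthly_api_cost(requests_per_day: int, tokens_per_request: int,
                     usd_per_million_tokens: float, days: int = 30) -> float:
    """Monthly spend when paying per token to a hosted model."""
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1_000_000 * usd_per_million_tokens


def monthly_self_hosted_cost(server_usd_per_hour: float,
                             hours_per_day: int = 24, days: int = 30) -> float:
    """Monthly spend for one always-on machine running a small model."""
    return server_usd_per_hour * hours_per_day * days


# All prices below are hypothetical placeholders for illustration only.
api = monthly_api_cost(requests_per_day=100_000, tokens_per_request=500,
                       usd_per_million_tokens=5.0)
hosted = monthly_self_hosted_cost(server_usd_per_hour=0.50)
print(f"API: ${api:,.0f}/month, self-hosted SLM: ${hosted:,.0f}/month")
```

The comparison leaves out engineering time, fine-tuning runs, and redundancy, so it is a starting point for a budget conversation rather than a full cost model.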

3. Latency and Real-Time Requirements

Speed is critical in time-sensitive applications. A drone landing on voice command needs instant interpretation, which an SLM provides with low-latency processing. LLMs, with their resource-heavy nature, lag behind, making SLMs the go-to for real-time tasks.

- Example: Interpreting voice commands to land a drone instantly.

- Why SLM Wins: Faster inference beats LLM’s processing delay.
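Latency claims are easy to check for your own setup. The following is a minimal timing harness, assuming your model is wrapped in a plain Python callable that takes a prompt string. `fake_model` is a hypothetical stand-in that just sleeps; replace it with a real inference call.

```python
import statistics
import time


def measure_latency_ms(fn, prompt: str, runs: int = 20) -> dict:
    """Call fn(prompt) repeatedly and report median and ~95th percentile latency."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(prompt)
        samples.append((time.perf_counter() - start) * 1000)
    samples.sort()
    p95_index = int(0.95 * (len(samples) - 1))
    return {"median_ms": statistics.median(samples), "p95_ms": samples[p95_index]}


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a model call; sleeps to simulate inference."""
    time.sleep(0.001)
    return "land"


print(measure_latency_ms(fake_model, "land the drone now"))
```

For real-time control, the tail latency (here the approximate 95th percentile) usually matters more than the median, since a single slow response can miss the deadline.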

4. Localized Processing

In privacy-sensitive areas like healthcare, keeping data on-device is a must. An SLM in a rural clinic processes patient queries offline, ensuring data security without cloud reliance. LLMs, needing cloud power, can’t match this level of control.

- Example: A smart health kiosk operating without internet in a remote area.

- Why SLM Wins: On-device processing enhances privacy and independence.

5. Domain Specificity and Fine-Tuning Efficiency

SLMs excel when fine-tuned for specific tasks. A legal-tech SLM trained on contract clauses tags compliance issues better than an LLM drowning in general knowledge. With smaller datasets, SLMs adapt faster, saving time and resources.

- Example: Fine-tuning an SLM on legal contracts for precise clause tagging.

- Why SLM Wins: Focused training outperforms LLM’s broad approach.

6. Predictability and Control

For structured outputs like invoice summaries, SLMs deliver consistent results, avoiding the creativity LLMs might add. This predictability is crucial in enterprise workflows where accuracy trumps variation.

- Example: Generating a structured medical summary with fixed formats.

- Why SLM Wins: Consistent outputs suit structured tasks better.
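Whichever model produces the output, a fixed format is only useful if it is checked. Here is a small sketch of validating a model's JSON output against a fixed set of fields before it enters a workflow. The field names in `REQUIRED_FIELDS` are a hypothetical invoice schema chosen for illustration.

```python
import json

# Hypothetical schema: field name -> accepted Python type(s).
REQUIRED_FIELDS = {"invoice_id": str, "total": (int, float), "currency": str}


def validate_summary(raw: str) -> dict:
    """Parse model output and reject anything that deviates from the schema."""
    data = json.loads(raw)  # raises ValueError (JSONDecodeError) on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field, expected in REQUIRED_FIELDS.items():
        if field not in data:
            raise ValueError(f"missing field: {field}")
        if not isinstance(data[field], expected):
            raise ValueError(f"wrong type for field: {field}")
    extra = set(data) - set(REQUIRED_FIELDS)
    if extra:
        raise ValueError(f"unexpected fields: {sorted(extra)}")
    return data


print(validate_summary('{"invoice_id": "INV-1", "total": 120.5, "currency": "EUR"}'))
```

Rejecting extra fields as well as missing ones is a deliberate choice here: it catches the kind of unrequested additions the section describes.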

7. Explainability and Debugging

SLMs are generally easier to audit due to their smaller size and narrower training data. In a legal-tech app, if an SLM flags a non-compliant clause, experts have a more realistic chance of tracing the decision back to examples in its training data. Debugging LLMs, with their vast complexity, is like navigating a maze.

- Example: Tracing a contract clause decision in a legal-tech tool.

- Why SLM Wins: Simpler architecture aids troubleshooting.

Performance Comparison: SLMs vs. LLMs

Tests across tasks like problem-solving, content generation, coding, and translation show where each type of model is stronger. For instance:

- Problem-Solving: An LLM like GPT-4o solves complex math problems with detailed steps, while an SLM like Llama 3.2-1b struggles, often hallucinating.

- Content Generation: GPT-4o writes a detailed 2000-word essay, while Llama 3.2-1b falls short at 1500 words with less flow.

- Coding: GPT-4o produces clean, modular Python scripts, while Llama 3.2-1b misses instructions, creating convoluted code.

- Language Translation: Both handle French and Spanish to English well, but SLMs are faster due to their size.

SLMs lag in complex tasks but excel in speed and efficiency for simpler, targeted needs.

Advantages of SLMs Over LLMs

Small language models (SLMs) have some clear wins over large language models (LLMs), especially in specific situations. Here’s why they stand out:

- Domain-Specific Excellence: When SLMs are fine-tuned for a particular field, like medicine or law, they often do better than LLMs. For example, an SLM trained on medical records can spot issues in patient notes more accurately than a general LLM, because it focuses only on that area and learns the details deeply.

- Lower Maintenance: SLMs don’t need as much care or fancy infrastructure as LLMs. They’re easier to set up and keep running, which saves time and effort for teams. You can deploy them without worrying about constant updates or heavy server costs.

- Operational Efficiency: SLMs train faster and respond quicker thanks to their smaller size. This makes them resource-efficient: they use less power and cut down wait times, which is great for tasks that need fast results without wasting energy.

Limitations of SLMs

While SLMs have their strengths, they aren’t flawless. Here are some challenges they face:

- Limited Reasoning: SLMs struggle with tough, multi-step problems or long documents. For instance, they might not figure out a complex math puzzle or summarize a 50-page report as well as LLMs, which can handle deeper thinking.

- Smaller Context Window: Many SLMs work with shorter context windows than large models, often a few thousand tokens, and those that accept longer inputs tend to use them less reliably. This limits how much of a long conversation or large document they can keep track of at once.

- Tighter Specialization: SLMs are great at one job but need retraining if you switch to a new area. Unlike LLMs, which can adapt to many topics with a few prompts, SLMs stick to their trained niche and can’t easily handle something different without extra work.
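A common workaround for a small context window is to split long text into overlapping chunks and process them one at a time. The sketch below is a minimal version that counts whitespace-separated words as a rough proxy for tokens. That proxy is an assumption: real tokenizers count differently, so leave headroom or swap in your model's tokenizer.

```python
def chunk_words(text: str, max_words: int = 200, overlap: int = 20) -> list:
    """Split text into chunks of at most max_words, repeating `overlap` words
    at each boundary so context is not lost between chunks."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be >= 0 and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


report = " ".join(f"w{i}" for i in range(10))
print(chunk_words(report, max_words=4, overlap=1))
```

Chunking helps with tasks like tagging or extraction that work locally on the text, but it does not restore the cross-document reasoning that the first limitation describes.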

Choosing the Right Model: A Decision Framework

Picking between SLMs and LLMs doesn’t have to be hard. Use this information to decide what’s best for your needs:

- Speed and Efficiency: Go for SLMs if you’re working in real-time situations or places with limited resources, like a chatbot on a phone or an IoT device in a remote area. They deliver quick answers without needing big setups.

- Versatility: Choose LLMs if your task is complex or covers many topics, like writing a creative story or analyzing data from different fields. They’re built to handle a wide range of challenges with flexibility.

- Budget Constraints: SLMs are the way to go if you’re watching costs. They save money on training and running compared to LLMs, which need expensive hardware and cloud support. This makes SLMs perfect for small teams or tight budgets.
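The three criteria above can be written down as a tiny rule-based helper. This is only one possible encoding: the `Task` fields and the rule that open-ended work takes priority over the other factors are assumptions made for illustration, not a tested policy.

```python
from dataclasses import dataclass


@dataclass
class Task:
    needs_realtime: bool      # speed and efficiency
    runs_on_device: bool      # limited hardware or no connectivity
    budget_constrained: bool  # cost matters more than breadth
    open_ended: bool          # complex or spanning many topics


def recommend(task: Task) -> str:
    """Return 'LLM', 'SLM', or 'either' based on the criteria in the text."""
    if task.open_ended:
        return "LLM"  # assumed priority: versatility needs outweigh the rest
    if task.needs_realtime or task.runs_on_device or task.budget_constrained:
        return "SLM"
    return "either"


phone_chatbot = Task(needs_realtime=True, runs_on_device=True,
                     budget_constrained=True, open_ended=False)
print(recommend(phone_chatbot))
```

In practice a task that is both open-ended and resource-constrained forces a trade-off, and that is the case where measuring both options on your own data is worth the effort.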

The Future of Efficient AI Models

The rise of small language models (SLMs) is changing AI, but it doesn’t mean large language models (LLMs) are outdated. Instead, a new mix is taking shape, combining the deep knowledge of LLMs with the quick, low-cost benefits of SLMs.
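One way such a mix could look is a cascade: send every request to the small model first and escalate to the large one only when the small model is unsure. The sketch below assumes the small model returns a confidence score between 0 and 1 alongside its answer. Both model functions are hypothetical stubs, and the 0.8 threshold is an arbitrary placeholder.

```python
from typing import Callable, Tuple


def cascade(prompt: str,
            small: Callable[[str], Tuple[str, float]],
            large: Callable[[str], str],
            threshold: float = 0.8) -> Tuple[str, str]:
    """Answer with the small model when it is confident, else fall back."""
    answer, confidence = small(prompt)
    if confidence >= threshold:
        return answer, "small"
    return large(prompt), "large"


def small_stub(prompt: str) -> Tuple[str, float]:
    """Hypothetical SLM: confident only on short prompts."""
    return "billing", 0.95 if len(prompt.split()) <= 8 else 0.4


def large_stub(prompt: str) -> str:
    """Hypothetical LLM fallback."""
    return "needs human review"


print(cascade("Where is my invoice?", small_stub, large_stub))
```

How well this works depends on how trustworthy the confidence signal is, which has to be checked against labeled examples before relying on it.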

Open-source SLMs are leading this change, growing fast to solve specific problems. Unlike large models, they’re built for things like checking health, answering customer questions, or translating languages in real time. They use less energy and can work on small devices.

As AI keeps getting better, smarter, task-focused models are likely to take on more of the work. These models will focus on being exact and saving resources, using techniques like simplifying model designs or reusing skills learned on earlier tasks. For example, in healthcare, SLMs could run simple tools to check health in remote areas. In factories, they could improve how supplies move without using too much power. This change will help more companies use AI because it’s cheaper and greener than big models.

Plus, with the ability to keep learning, these models will stay useful as new data and needs come up. This future promises AI that’s strong and practical, matching tech growth with real-life use.

Conclusion

The battle of LLMs vs SLMs isn’t about one winning overall; it’s about fit. Small language models outperform LLMs when speed, cost, privacy, or domain focus matter most. Efficient AI models running in rural clinics and on IoT devices show that effectiveness does not depend on size. Businesses can use SLMs for practical, real-time needs while reserving LLMs for complex, creative work. The future lies in choosing the right tool for the job, making AI work smarter, not just bigger.