Top Machine Learning Algorithms Used By AI Professionals: Explained

Machine Learning and Artificial Intelligence were the "hot topics" of seemingly every trending article in 2021. Much as the internet revolutionized everyone's lives, Artificial Intelligence (A.I.) and Machine Learning will transform our lives in ways that were labelled impossible only years ago.

Machine Learning: What Is It?

In 1959, Arthur Samuel coined the term Machine Learning. He was a pioneer in Artificial Intelligence, computer gaming and Machine Learning.

Machine Learning, in simpler terms, is an application of Artificial Intelligence. It allows systems to learn from data and improve with experience without a human having to explicitly program every rule. For instance, if you've ever seen "People who bought this also viewed" on a shopping website, that's machine learning at work.

Why Is ML So Important?

Given the increasing volume and variety of data, computational processing is essential to extract in-depth insights at an affordable cost. Machine Learning and A.I. let us automatically build models that can analyze larger, more complex data and return faster, more accurate results, allowing organizations to make more informed decisions.

The 3 Types of Machine Learning Algorithms

There are three broad ML/AI algorithm categories: supervised learning, unsupervised learning, and reinforcement learning.

- Supervised learning is helpful when a property (label) is available for a particular dataset (the training set) but must be predicted for other instances.

- Unsupervised learning is helpful when the goal is to discover implicit relationships in an unlabeled dataset, where items are not pre-assigned to any category.

- Reinforcement learning is somewhere in between these two extremes. There is some form of feedback for every predictive step or action, but no precise label or error message.

Supervised Learning Algorithms

1) Decision Trees

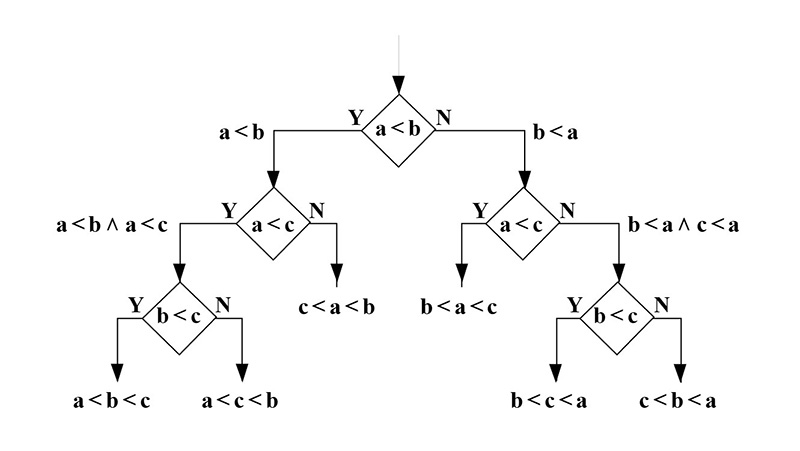

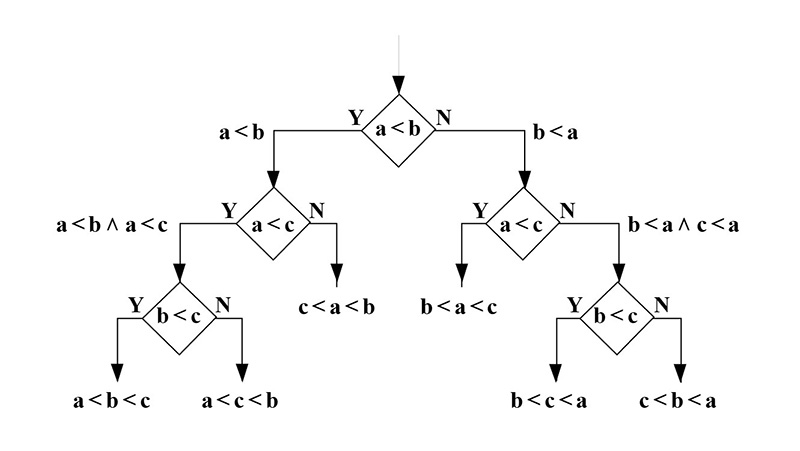

A decision tree is a decision-support tool that uses a tree-like graph of decisions and their possible consequences, including chance-event outcomes, resource costs and utility. You can see how it looks in the image.

From a business-decision point of view, a decision tree is, most of the time, the minimum number of yes/no questions one has to ask to assess the probability of making a correct decision. It is a structured and systematic way to approach a problem and reach a logical conclusion.
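
As a rough illustration of how a tree picks its yes/no questions, the sketch below scores each candidate question by Gini impurity and keeps the one that splits the labels most cleanly. The toy data, the feature meanings and the function names are assumptions made up for this example; real libraries repeat this step recursively to grow a full tree.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 0.0 means every label in the group is the same."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def best_question(rows, labels):
    """Return (feature_index, weighted_impurity) for the cleanest yes/no split."""
    best = None
    for f in range(len(rows[0])):
        yes = [lab for row, lab in zip(rows, labels) if row[f]]
        no = [lab for row, lab in zip(rows, labels) if not row[f]]
        # Weight each branch's impurity by how many rows land in it.
        score = (len(yes) * gini(yes) + len(no) * gini(no)) / len(labels)
        if best is None or score < best[1]:
            best = (f, score)
    return best

# Hypothetical toy data: [is_raining, is_weekend] -> did we go hiking?
rows = [[True, True], [True, False], [False, True], [False, False]]
labels = ["no", "no", "yes", "yes"]

feature, impurity = best_question(rows, labels)
print(feature, impurity)  # feature 0 (is_raining) separates the labels perfectly
```

Here asking "is it raining?" leaves both branches pure, so it is the first question the tree would ask.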

2) Naive Bayes Classification

Naive Bayes classifiers are a family of simple probabilistic classifiers that apply Bayes' theorem with strong (naive) independence assumptions between the features.

Some real-world examples of Naive Bayes classifiers in action are labeling email as spam or not spam, categorizing articles on a website, and identifying whether a text expresses positive or negative emotion.
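
The spam example can be sketched in a few lines. This is a minimal word-count version with add-one (Laplace) smoothing, written only for illustration; the four training messages and the function names are invented, and a production filter would use far more data and better tokenization.

```python
import math
from collections import Counter, defaultdict

def train(docs):
    """docs: list of (text, label). Returns per-label word counts and document counts."""
    word_counts = defaultdict(Counter)
    label_counts = Counter()
    for text, label in docs:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts

def predict(text, word_counts, label_counts):
    vocab = {w for counts in word_counts.values() for w in counts}
    total_docs = sum(label_counts.values())
    scores = {}
    for label in label_counts:
        # log P(label) + sum of log P(word | label); the "naive" part is
        # treating every word as independent of the others given the label.
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for w in text.lower().split():
            score += math.log((word_counts[label][w] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical training messages.
docs = [
    ("win cash prize now", "spam"),
    ("cheap prize offer", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday", "ham"),
]
model = train(docs)
label_a = predict("cash prize", *model)
label_b = predict("monday meeting", *model)
print(label_a, label_b)
```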

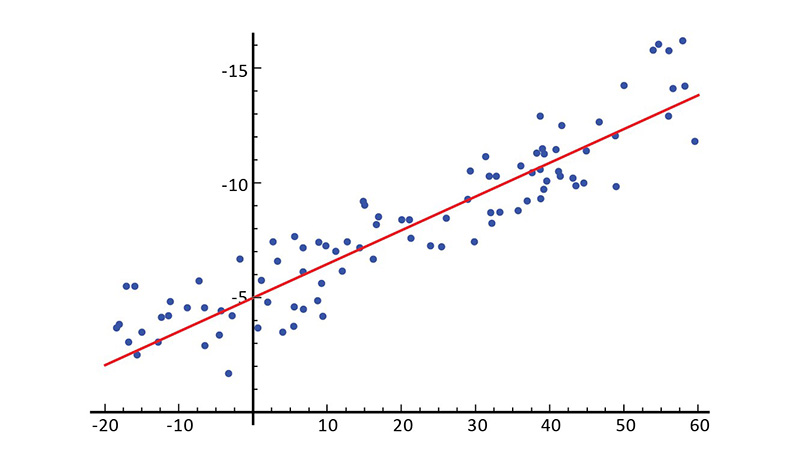

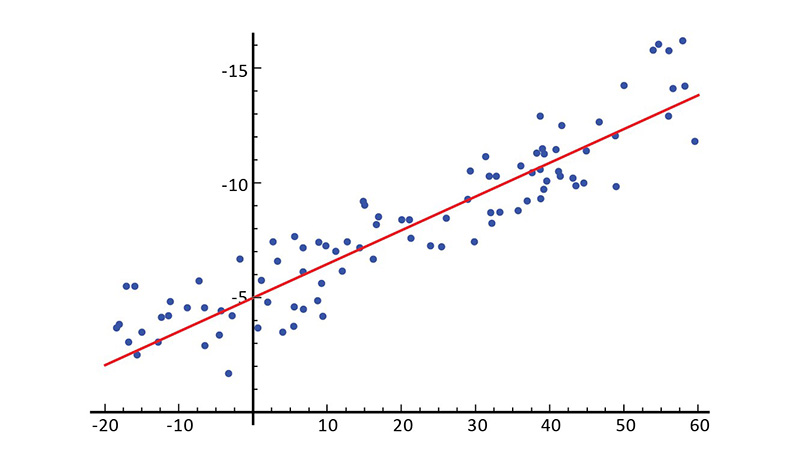

3) Ordinary Least Squares Regression

Linear regression is a commonly used term in statistics, and least squares is one method for performing it. In layman's terms, linear regression is the task of fitting a straight line through a collection of points. There are many ways to do this. The "ordinary least squares" strategy is to draw a line, measure the vertical distance between the line and each data point, square those distances, and add them up. The fitted line is the one for which this sum of squared distances is as small as possible.

Linear refers to the type of model you use to fit the data, while least squares refers to the kind of error metric you are minimizing.
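
For a single input variable the least-squares line has a well-known closed form, sketched below. The data points are made up so that they lie exactly on y = 2x + 1, and the function name is just for this example.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); this minimises the sum of
    # squared vertical distances between the points and the line.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def squared_error(xs, ys, slope, intercept):
    """The error metric being minimised."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # illustrative points on y = 2x + 1

slope, intercept = fit_line(xs, ys)
print(slope, intercept, squared_error(xs, ys, slope, intercept))
```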

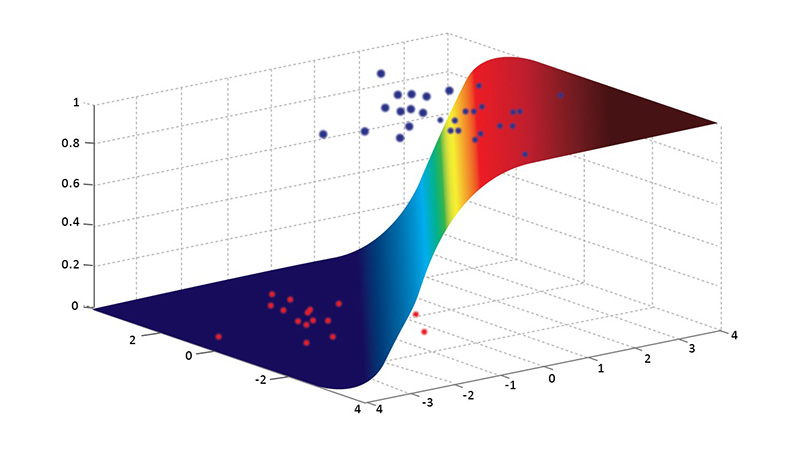

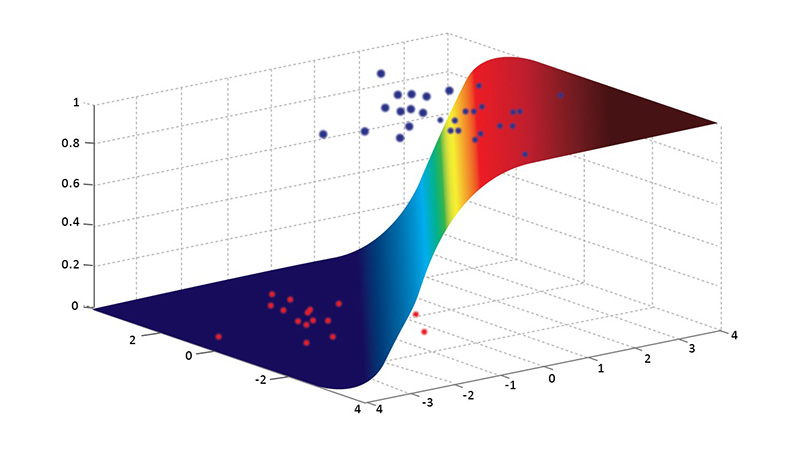

4) Logistic Regression

Logistic regression models a binomial outcome using one or more explanatory variables. It measures the relationship between the categorical dependent variable and the independent variables by estimating probabilities with a logistic function (the cumulative logistic distribution).
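
A minimal sketch of the idea, assuming a single explanatory variable and plain gradient descent on the log loss. The "hours studied versus passed" data, the learning rate and the epoch count are illustrative choices, not recommendations.

```python
import math

def sigmoid(z):
    """Logistic function: squashes any real number into a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(xs, ys, lr=0.5, epochs=2000):
    """Fit P(y = 1 | x) = sigmoid(w * x + b) by gradient descent on the log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= lr * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * sum(errors) / n
    return w, b

# Hypothetical data: hours studied -> passed the exam (1) or not (0).
xs = [0.5, 1.0, 1.5, 3.0, 3.5, 4.0]
ys = [0, 0, 0, 1, 1, 1]

w, b = fit_logistic(xs, ys)
p_low = sigmoid(w * 1.0 + b)   # estimated probability of passing after 1 hour
p_high = sigmoid(w * 3.5 + b)  # estimated probability of passing after 3.5 hours
print(round(p_low, 3), round(p_high, 3))
```

The output is a probability rather than a hard label, which is what distinguishes logistic regression from a plain yes/no rule.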

Regressions can be used in real-world applications like:

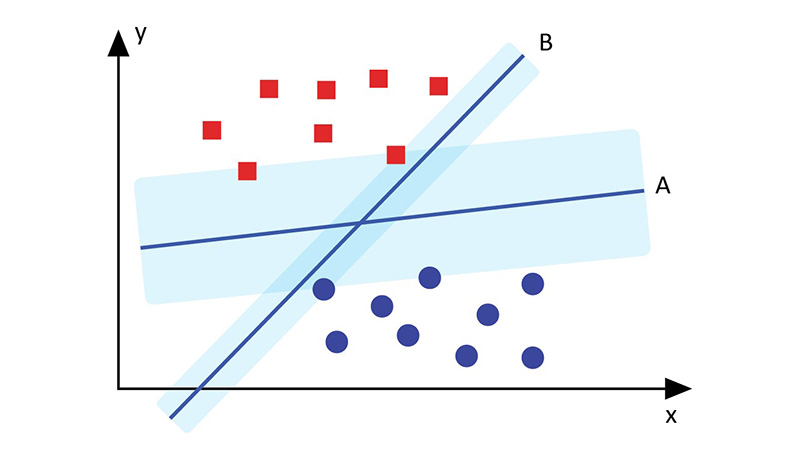

5) Support Vector Machines

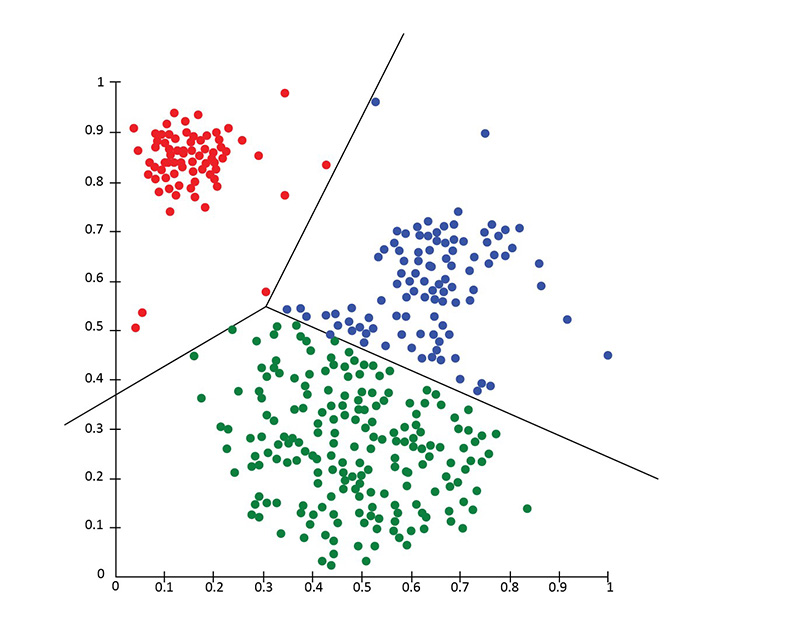

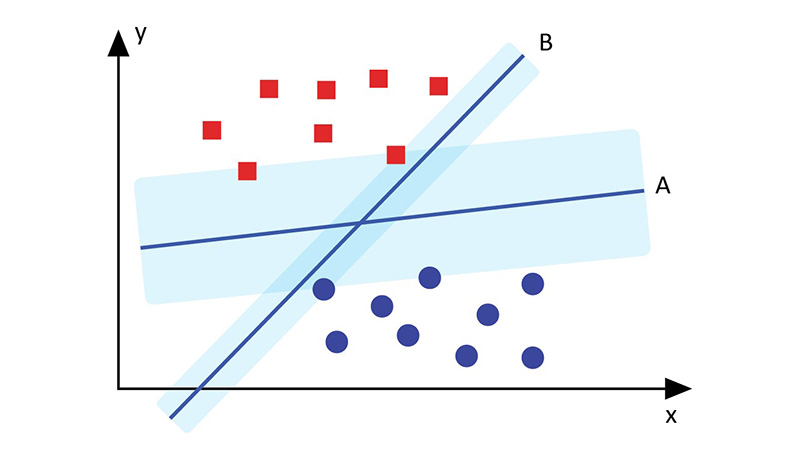

SVM is a binary classification algorithm. Given a set of points of two types in an N-dimensional space, SVM generates an (N-1)-dimensional hyperplane that separates those points into two groups. Let's say you have two types of points on a sheet of paper that are linearly separable. SVM will find a straight line that separates the points into the two types and is situated as far as possible from all of them.

In terms of scale, some of the biggest problems that have been tackled with SVMs include display advertising, human splice site recognition and image-based gender detection.
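
The "points on a sheet of paper" picture can be approximated with a small linear SVM trained by sub-gradient descent on the hinge loss. This is a simplified sketch, not how production SVM solvers work; the six points, the learning rate, the penalty strength and the epoch count are all assumptions chosen for the example.

```python
def train_linear_svm(points, labels, lr=0.001, reg=0.01, epochs=5000):
    """Fit w, b for the line w.x + b = 0 by minimising hinge loss plus an L2 penalty."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), y in zip(points, labels):
            margin = y * (w[0] * x1 + w[1] * x2 + b)
            # The L2 penalty keeps shrinking w, which favours a wide margin.
            w[0] -= lr * reg * w[0]
            w[1] -= lr * reg * w[1]
            if margin < 1:
                # Point is misclassified or too close to the line: move the line away.
                w[0] += lr * y * x1
                w[1] += lr * y * x2
                b += lr * y
    return w, b

def classify(w, b, point):
    return 1 if w[0] * point[0] + w[1] * point[1] + b >= 0 else -1

# Hypothetical, linearly separable points; labels are -1 and +1.
points = [(1, 1), (2, 1), (1, 2), (4, 4), (5, 4), (4, 5)]
labels = [-1, -1, -1, 1, 1, 1]

w, b = train_linear_svm(points, labels)
predictions = [classify(w, b, p) for p in points]
print(predictions)
```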

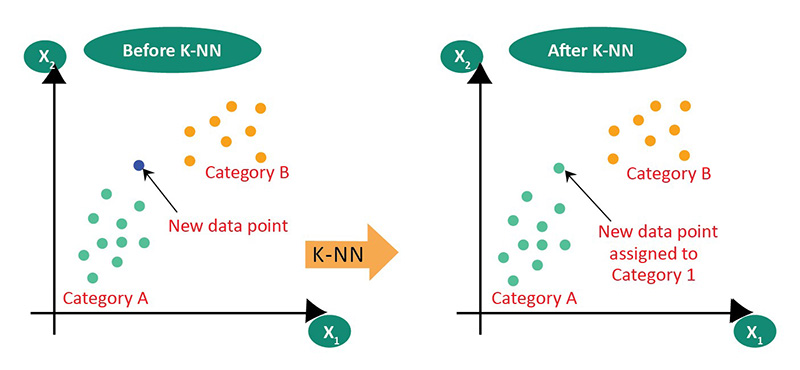

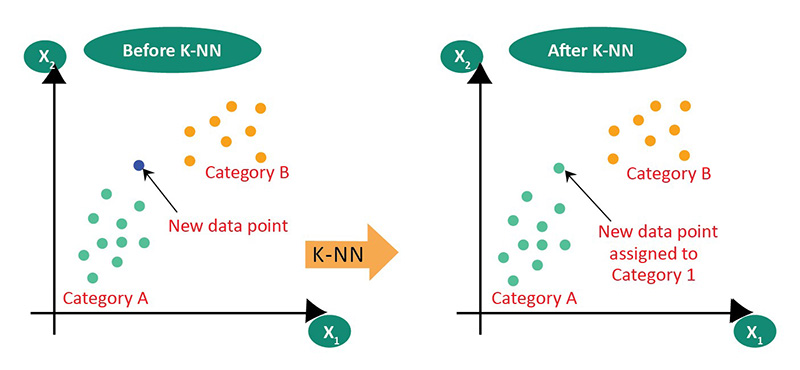

6) K-Nearest Neighbors

K-Nearest Neighbors (K-NN) is one of the most fundamental classification algorithms in Machine Learning. It is used extensively in intrusion detection, pattern recognition, and data mining. Let's say there are two categories, A and B, and we have a new point x1 that needs to be placed in one of them. K-NN allows us to easily identify the class or category of that data point. Take a look at the following diagram.

K-NN first selects the number K of neighbors to consider and then calculates the Euclidean distance from the new point to each existing data point. It takes the K nearest neighbors according to those distances and counts how many of them fall in each category. Finally, it assigns the new data point to the category with the most neighbors.
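
The steps above can be sketched directly. The two clusters of points and the choice of K = 3 are made up for this example; `math.dist` requires Python 3.8 or later.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, new_point, k=3):
    # 1. Euclidean distance from the new point to every labelled point.
    distances = sorted(
        (math.dist(p, new_point), label)
        for p, label in zip(train_points, train_labels)
    )
    # 2. Keep the K nearest neighbours.
    nearest = [label for _, label in distances[:k]]
    # 3. Assign the category that holds the most neighbours.
    return Counter(nearest).most_common(1)[0][0]

# Hypothetical categories A and B.
train_points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
train_labels = ["A", "A", "A", "B", "B", "B"]

x1 = (2, 2)
category = knn_predict(train_points, train_labels, x1)
print(category)
```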

7) Random Forest

Random forests are supervised machine-learning algorithms built from decision trees. They are used in a variety of industries, such as banking and e-commerce, to predict behavior and outcomes.

A random forest is made up of many decision trees. The algorithm generates the 'forest' using bagging, or bootstrap aggregating: each tree is trained on a random sample of the training data drawn with replacement. Bagging is an ensemble meta-algorithm that improves the accuracy and stability of machine-learning algorithms.

The random forest determines its outcome from the predictions of the individual trees, taking the average of their outputs for regression or the majority vote for classification. Accuracy generally improves as the number of trees increases, although the gains level off.
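
Bagging itself is easy to sketch. The example below trains 25 one-split trees ("stumps") on bootstrap samples of a single made-up feature and combines them by majority vote. It is deliberately simplified: a real random forest grows deeper trees and also picks a random subset of features at each split, which is omitted here. The data, tree count and seed are assumptions.

```python
import random
from collections import Counter

def fit_stump(xs, ys):
    """One-split tree on one feature: returns (threshold, left_label, right_label)."""
    values = sorted(set(xs))
    majority = Counter(ys).most_common(1)[0][0]
    # Fallback when the sample holds a single distinct value: constant prediction.
    best = (len(ys) + 1, values[0], majority, majority)
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0]
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if errors < best[0]:
            best = (errors, t, left_label, right_label)
    return best[1:]

def fit_forest(xs, ys, n_trees=25, seed=0):
    rng = random.Random(seed)
    n = len(xs)
    forest = []
    for _ in range(n_trees):
        # Bootstrap sample: draw n rows with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        forest.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return forest

def predict(forest, x):
    votes = [left if x <= t else right for t, left, right in forest]
    return Counter(votes).most_common(1)[0][0]  # majority vote across trees

xs = [1, 2, 3, 7, 8, 9]   # hypothetical feature values
ys = [0, 0, 0, 1, 1, 1]   # hypothetical class labels
forest = fit_forest(xs, ys)
print(predict(forest, 2.0), predict(forest, 8.0))
```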

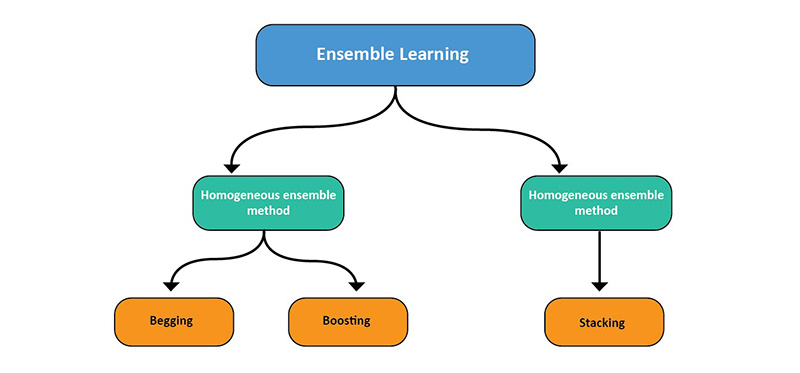

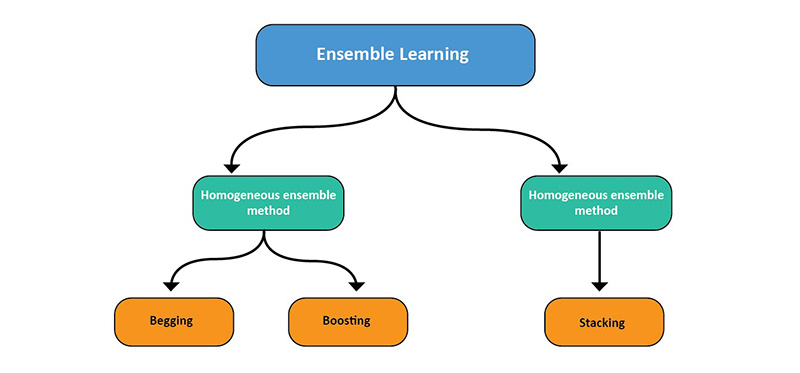

8) Ensemble Methods

Ensemble methods are learning algorithms that construct a set of classifiers and then classify new data points by taking a weighted vote of their predictions. The original ensemble method is Bayesian averaging, but more recent algorithms include error-correcting output coding, bagging and boosting.

How do ensemble models work? And why are they better than individual models?

- They average out biases. If you average a set of Democratic-leaning polls and Republican-leaning polls together, you get something that doesn't lean either way.

- They reduce variance. The aggregate opinion of several models is less noisy than the single opinion of one model. In finance this is called diversification: a portfolio of many stocks is less volatile than a single stock. This is also why your models perform better with more data points rather than fewer.

- They are unlikely to overfit. Ensemble models not only improve performance but also lower the chance of overfitting. Think of someone evaluating a product's performance. A single person might focus too heavily on one feature or detail and fail to give a comprehensive evaluation. When a group of people reviews the product, each person may focus on a different feature, which reduces the chance of overweighting any one of them and leads to a more generalized assessment. In the same way, an ensemble produces a well-rounded model that is less likely to overfit.
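
The weighted vote at the heart of an ensemble can be sketched as follows. The three classifier outputs and their weights are invented for illustration; in practice the weights might come from each model's validation accuracy.

```python
from collections import defaultdict

def weighted_vote(predictions, weights):
    """Return the label whose supporters carry the most total weight."""
    totals = defaultdict(float)
    for label, weight in zip(predictions, weights):
        totals[label] += weight
    return max(totals, key=totals.get)

# Hypothetical outputs of three classifiers for one email.
predictions = ["spam", "ham", "ham"]

trusted_first = weighted_vote(predictions, [0.6, 0.25, 0.25])  # spam: 0.6, ham: 0.5
equal_weights = weighted_vote(predictions, [1.0, 1.0, 1.0])    # spam: 1.0, ham: 2.0
print(trusted_first, equal_weights)
```

With equal weights this reduces to a simple majority vote; unequal weights let a more reliable model outvote two weaker ones.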

Unsupervised Learning Algorithms

1) Clustering Algorithms

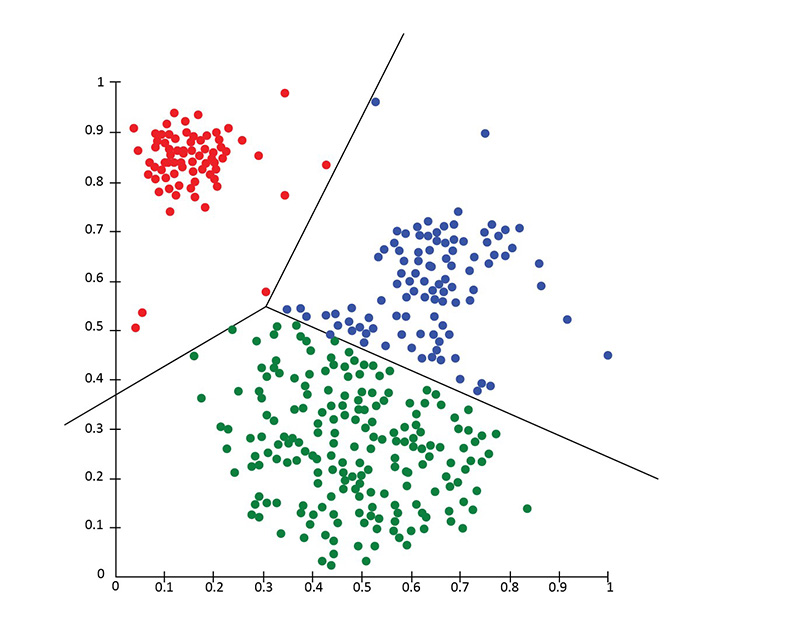

Clustering is the task of grouping objects so that objects in the same group (cluster) are more similar to each other than to objects in other groups.

Each clustering algorithm is unique. Here are some examples:

- Algorithms based on centroids

- Connectivity-based algorithms

- Density-based algorithms

- Probabilistic

- Neural networks / Deep Learning

Because it is an unsupervised technique, clustering is used as a data analysis tool to identify useful patterns in data, such as groups of consumers based on their activity or geography.
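
As one concrete instance of the centroid-based family, here is a minimal k-means sketch. The six points and the two starting centroids are assumptions; real implementations choose starting centroids more carefully and stop when assignments no longer change.

```python
import math

def kmeans(points, centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid and re-centering."""
    for _ in range(iterations):
        # Assignment step: index of the closest centroid for every point.
        assignments = [
            min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(len(centroids)):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                new_centroids.append(tuple(sum(v) / len(members) for v in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # keep an empty cluster's centroid
        centroids = new_centroids
    return assignments, centroids

# Hypothetical customers described by two activity measures; no labels are given.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 9.0), (8.0, 9.5)]
assignments, centroids = kmeans(points, centroids=[(1.0, 1.0), (8.0, 8.0)])
print(assignments)
```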

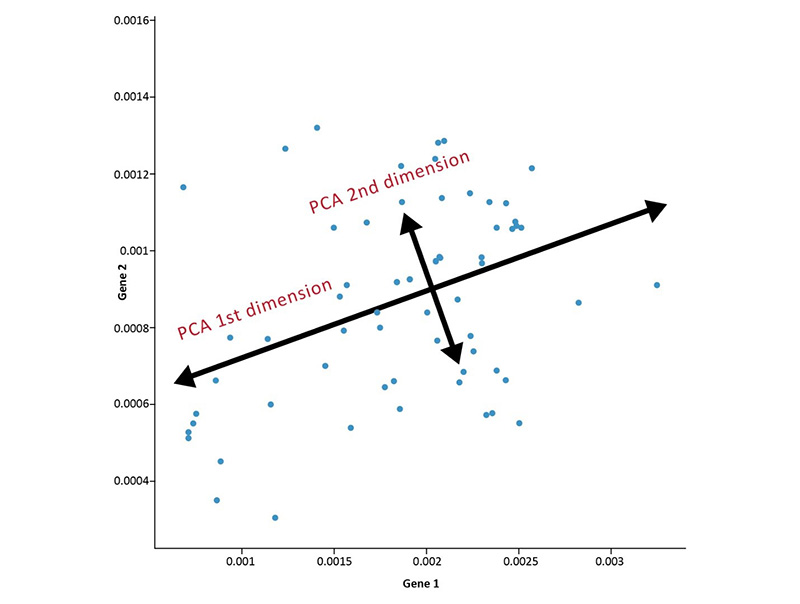

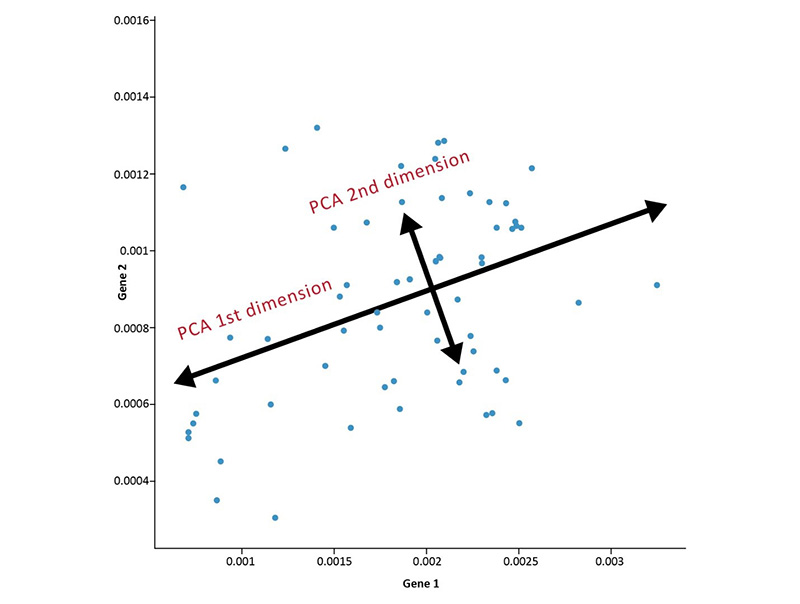

2) Principal Component Analysis

PCA is a statistical technique that converts a set of observations of potentially correlated variables into a set of values of linearly uncorrelated variables, called principal components.

PCA can be used to compress or simplify data, making it easier to learn from and to visualize. Domain knowledge is important when deciding whether to use PCA. It is not recommended when the data is noisy (that is, when all the components of PCA have quite high variance).
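
To make the idea concrete, the sketch below finds the first principal component of a small 2-D dataset by power iteration on its covariance matrix. The four points are made up and deliberately lie close to a diagonal line; a library routine would normally be used instead of this hand-rolled version.

```python
import math

def covariance_matrix(points):
    """Sample covariance matrix of 2-D points."""
    n = len(points)
    mean_x = sum(p[0] for p in points) / n
    mean_y = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mean_x) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - mean_y) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mean_x) * (p[1] - mean_y) for p in points) / (n - 1)
    return [[sxx, sxy], [sxy, syy]]

def apply(matrix, v):
    return [matrix[0][0] * v[0] + matrix[0][1] * v[1],
            matrix[1][0] * v[0] + matrix[1][1] * v[1]]

def first_component(cov, iterations=100):
    """Power iteration: repeatedly multiply by cov and renormalise."""
    v = [1.0, 0.0]
    for _ in range(iterations):
        v = apply(cov, v)
        norm = math.hypot(v[0], v[1])
        v = [v[0] / norm, v[1] / norm]
    cv = apply(cov, v)
    variance = v[0] * cv[0] + v[1] * cv[1]  # variance captured along v
    return v, variance

points = [(2.0, 1.9), (3.0, 3.2), (4.0, 3.9), (5.0, 5.1)]  # illustrative data
cov = covariance_matrix(points)
direction, variance = first_component(cov)
explained = variance / (cov[0][0] + cov[1][1])  # share of total variance
print(direction, round(explained, 3))
```

Because almost all of the variance lies along one direction, the two correlated variables could be replaced by a single component with little loss.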

3) Singular Value Decomposition

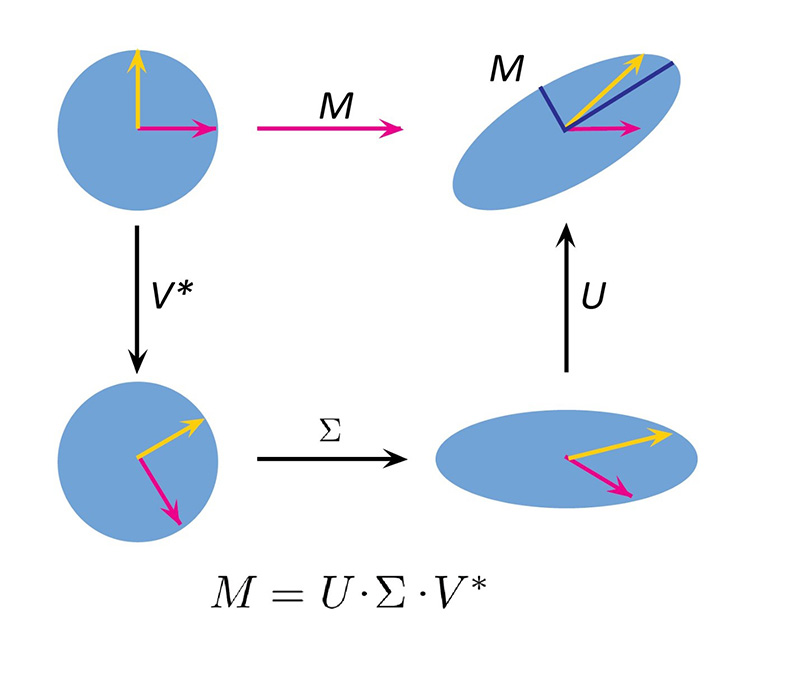

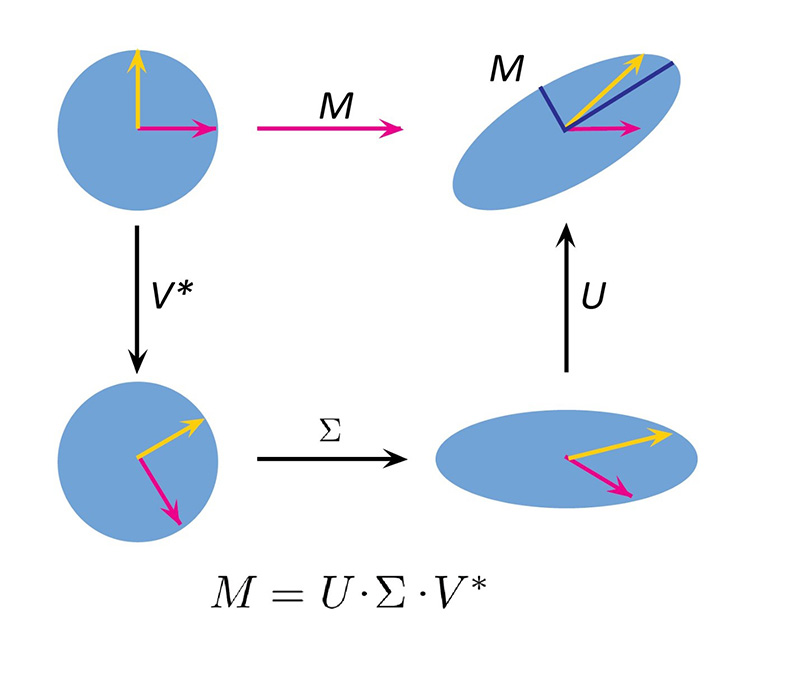

In linear algebra, SVD is a factorization of a real or complex matrix. For a given m×n matrix M, there exists a decomposition such that M = UΣV*, where U and V are unitary matrices, V* is the conjugate transpose of V, and Σ is a rectangular diagonal matrix.

PCA is actually a simple application of SVD. In computer vision, the first face recognition algorithms used PCA and SVD to represent faces as a linear combination of "eigenfaces", perform dimensionality reduction, and then match faces to identities using simple methods. Modern methods are much more sophisticated, but many still rely on similar techniques.
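
The link between the two can be written out explicitly. Assume X is an n×p data matrix whose columns have been centered (X, n, p and C are notation introduced here for illustration, not taken from the article). Applying SVD to X gives the covariance matrix C directly:

$$
X = U \Sigma V^{*}
\quad\Longrightarrow\quad
C = \frac{1}{n-1} X^{*} X = V \, \frac{\Sigma^{*} \Sigma}{n-1} \, V^{*}
$$

So the columns of V are the principal directions, and each squared singular value divided by n - 1 is the variance along the corresponding direction.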

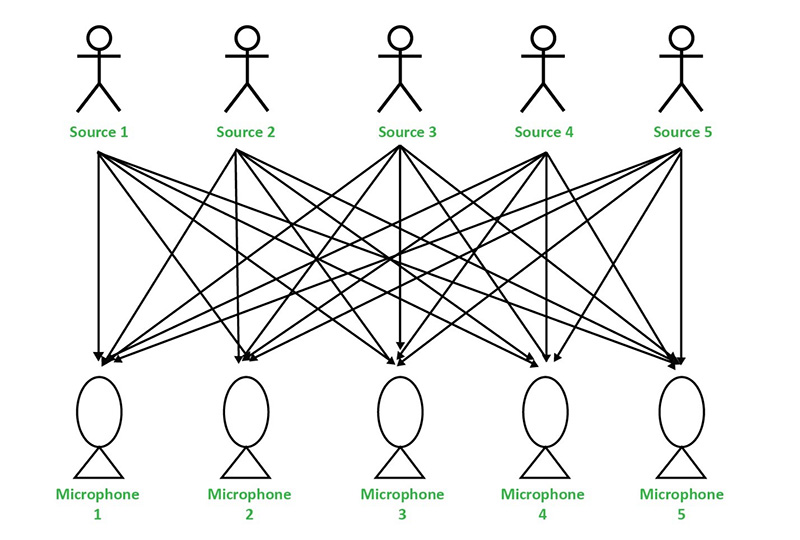

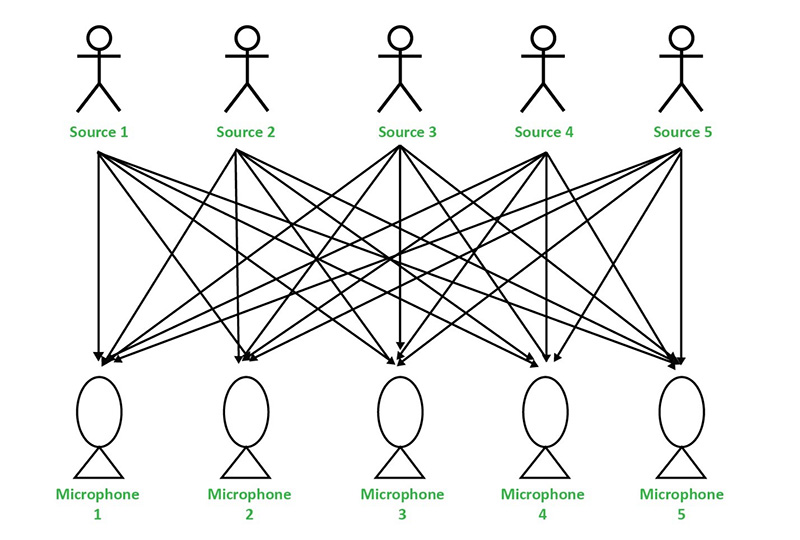

4) Independent Component Analysis

ICA is a statistical technique for revealing the hidden factors that underlie sets of random variables, measurements, or signals. ICA defines a generative model for the observed multivariate data, which is typically given as a large database of samples.

In the model, the data variables are assumed to be linear mixtures of some unknown latent variables, and the mixing system is also unknown. The latent variables are assumed to be non-Gaussian and mutually independent, and they are called the independent components of the observed data.

Although ICA is related to PCA, it is a more powerful technique, capable of finding the underlying factors or sources when classic methods fail. It can be applied to digital images, document databases and economic indicators.

Reinforcement Learning

Reinforcement learning focuses on regimented learning through trial and error. The algorithm is given a set of actions, parameters, and end values. It then explores different options, monitoring and evaluating each result to determine which is best. It draws on past experience to improve its responses and adapts its approach to new situations to achieve the best possible result.

Artificial neural networks are popular models in reinforcement learning, where they typically learn the mapping from situations to actions or values. They are also widely used in supervised and unsupervised learning.
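
Trial-and-error learning can be illustrated with tabular Q-learning, one common reinforcement learning algorithm (chosen here as an example; the article does not name a specific one). The environment is a made-up corridor of four cells with a reward at the right end, and the learning rate, discount and exploration rate are arbitrary illustrative values.

```python
import random

N_STATES = 4         # cells 0..3 in a corridor; cell 3 is the goal
ACTIONS = (-1, +1)   # action index 0 = step left, index 1 = step right

def step(state, action_index):
    """Move within the corridor; reaching the goal pays a reward of 1."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward

def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action] = estimated value
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            # Explore at random sometimes (and on ties); otherwise exploit experience.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward = step(state, action)
            # Feedback arrives as a reward, not as a labelled "correct" action.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

q_table = train()
policy = [row.index(max(row)) for row in q_table[:-1]]  # best action per non-goal cell
print(policy)  # action index 1 means "step right"
```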

An artificial neural network (ANN) is a collection of 'units' arranged in layers, where each unit connects to units in the layers on either side. ANNs are inspired by biological systems such as the brain and the way they process information. They consist of interconnected processing elements that work in unison to solve particular problems.

ANNs learn by example and through experience. They are especially useful for modelling non-linear relationships in high-dimensional data, or where the relationship between the input variables is difficult to understand.
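
To show what "units arranged in layers" means in practice, here is a tiny two-layer network evaluated by hand. The weights are hand-picked for illustration (a real network would learn them from examples) so that the network computes XOR, a classic relationship that no single straight line can model.

```python
def relu(z):
    """Non-linear activation: passes positive values, zeroes out negatives."""
    return max(0.0, z)

def identity(z):
    return z

def dense(inputs, weights, biases, activation):
    """One layer: every unit takes a weighted sum of all inputs, then activates."""
    return [
        activation(sum(w * x for w, x in zip(unit_weights, inputs)) + bias)
        for unit_weights, bias in zip(weights, biases)
    ]

def forward(x):
    # Hidden layer with two units (hand-picked weights, not learned).
    hidden = dense(x, [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0], relu)
    # Output layer with one unit combining the hidden units.
    return dense(hidden, [[1.0, -2.0]], [0.0], identity)[0]

for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, forward(x))
```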

Ending Notes

These are some of the top machine learning algorithms used by AI professionals. However, there is a lot to consider when choosing the best machine learning algorithm for your business's analytics. Using ML algorithms efficiently, combined with your knowledge of A.I., will help you create machine-learning applications that provide better experiences for everyone.